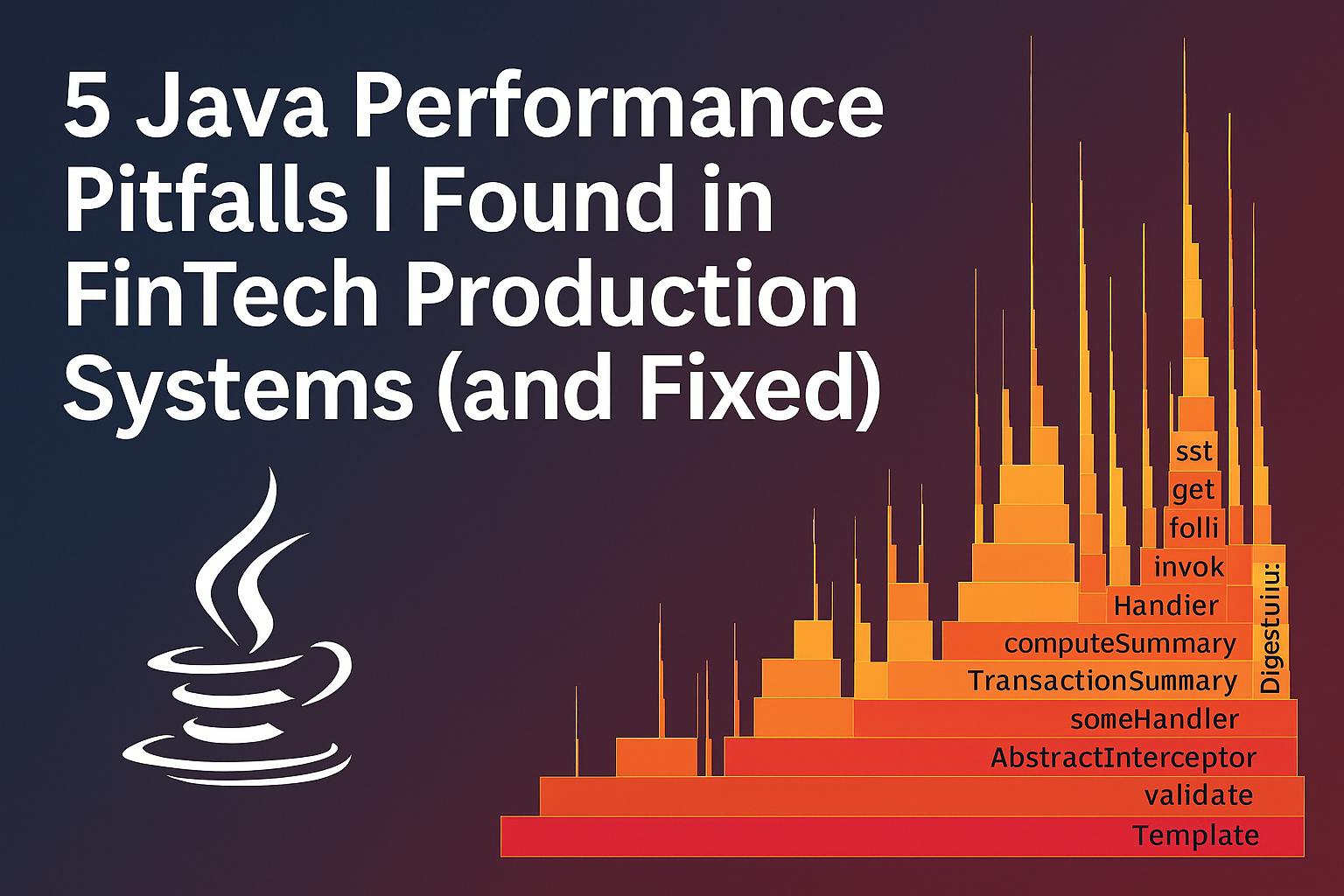

5 Java Performance Pitfalls and How Real-World Profiling Can Fix Them

String concatenation using the + operator in loops continues to cause performance issues in enterprise applications. This article measures the impact of this and similar patterns through profiling and case studies.

Long Summary

While issues like String concatenation using the + operator in loops are widely known, they continue to cause performance issues in real-world cloud-native and enterprise applications in production environments. This article focuses on such patterns not from a theoretical standpoint, but by showcasing their measurable impact through profiling tools and case studies in high-load microservices.

Key Takeaways

- String concatenation using the + operator in loops can lead to performance degradation. This is due to continuous object creation and intensified garbage collection (GC) activity.

- Object creation inside loops without proper object pooling or reuse can increase memory allocation rates and reduce throughput.

- Choosing the wrong Java collection implementations or improper initialization can cause excessive resizing. It can also lead to increased GC overhead and slower application performance.

- Broad or generalized exception handling negatively impacts runtime efficiency. It complicates debugging and performance tuning.

- Improper concurrency management, such as incorrect thread pool sizing or synchronization issues, can cause scalability bottlenecks and degrade application responsiveness.

Introduction

Performance degradation in production Java applications, particularly cloud-native FinTech systems, often originates from code inefficiencies rather than architectural flaws. Although they may seem minor during development, these inefficiencies compound under production loads, resulting in severe GC overhead, memory churn, and thread contention. This article identifies five common Java performance pitfalls encountered in real-world enterprise applications, supported with concrete profiling data and metrics. Each pitfall includes practical solutions based on thorough analysis using industry-standard profiling tools.

Profiling Setup and Methodology

A step-by-step guide to setting up Java 17, IntelliJ IDEA on a Mac, and common profiling tools is provided below. These steps will be used across all pitfalls while analyzing and solving the five Java performance issues. Once the setup is complete, code will be written for each pitfall, analyzed, optimized, and then tested.

Environment Setup

- Java SDK and IDE Setup:

  - Ensure Java 17 is installed using Homebrew:

    $ brew install openjdk@17

  - Set the JAVA_HOME environment variable in your .bash_profile or .zshrc file:

    $ export JAVA_HOME=$(/usr/libexec/java_home -v17)

  - Install IntelliJ IDEA Community or Ultimate from the JetBrains website.

- Spring Boot Microservice Setup:

  - Use Spring Initializr to generate a Spring Boot project.

  - Include dependencies for Web and Actuator.

- Java Flight Recorder (JFR) Configuration:

  - Open IntelliJ IDEA and navigate to the Run/Debug configurations of the project you have created through Spring Initializr.

  - Under VM options, add:

    -XX:StartFlightRecording=duration=300s,filename=recording.jfr,settings=profile

  - Run your Spring Boot application.

  - Perform load testing using Apache JMeter to simulate production traffic.

  - Open JDK Mission Control (JMC) and load the generated recording.jfr file to inspect hotspots, allocation trends, and GC activity.

- GC Logging Setup:

  - Modify the Run/Debug configuration in IntelliJ IDEA.

  - Under VM options, add:

    -Xlog:gc*:file=gc.log:time,uptime,level,tags

  - Run the Spring Boot application.

  - After load testing, inspect gc.log using GCViewer to analyze heap usage patterns, GC pauses, and memory allocation behaviors.

- async-profiler Configuration:

  - Install async-profiler using Homebrew:

    $ brew install async-profiler

  - Identify your Java application's process ID (pid) using:

    $ jps

  - Attach async-profiler from the terminal, passing the pid reported by jps as the last argument:

    $ sudo profiler.sh -d 60 -e alloc -f flame.svg <pid>

  - Run your load tests concurrently.

  - Open the generated flame.svg file in a browser to visually inspect allocation hotspots. With -e alloc the profile covers allocations; use -e cpu instead to look for CPU bottlenecks.

- Load Testing with JMeter:

  - Download and install Apache JMeter.

  - Create a test plan simulating real-world concurrent user scenarios (e.g., 500 users).

  - Execute the test plan to measure and record performance metrics before and after optimizations.

These steps offer a clear and reliable way to profile and test Java applications, making it easier to find and fix performance problems.
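As a complement to the -XX:StartFlightRecording option in the setup steps, a recording can also be started from code through the JDK's jdk.jfr API, which can be handy for wrapping one suspect code path in a test. The following is a minimal sketch rather than part of the setup described here: the class name JfrRecordingSketch, the recordWhile helper, and the temp-file location are all illustrative assumptions.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.text.ParseException;
import jdk.jfr.Configuration;
import jdk.jfr.Recording;

final class JfrRecordingSketch {

    // Runs the workload while a JFR recording is active and returns the .jfr file.
    static Path recordWhile(Runnable workload) {
        try {
            // "profile" is the predefined settings name also used in the VM option.
            Configuration settings = Configuration.getConfiguration("profile");
            Path output = Files.createTempFile("recording", ".jfr"); // assumed location
            try (Recording recording = new Recording(settings)) {
                recording.start();
                workload.run();
                recording.stop();
                recording.dump(output); // write the captured events to disk
            }
            return output;
        } catch (IOException | ParseException e) {
            throw new IllegalStateException("Could not create JFR recording", e);
        }
    }

    public static void main(String[] args) {
        Path file = recordWhile(() -> {
            StringBuilder sb = new StringBuilder();
            for (int i = 0; i < 100_000; i++) {
                sb.append(i);
            }
        });
        System.out.println("Recording written to " + file);
    }
}
```

The resulting file can be opened in JDK Mission Control in the same way as a recording produced by the VM option.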

Pitfall #1: String Concatenation in Loops – Production Impact Case Study

Original Code

String summary = "";

for (IncentiveData data : incentiveList) {

summary += "Region: " + data.getRegion() + ", Manager: " + data.getManagerId() + ", Total: " + data.getMonthlyPayout() + "\n";

}

String concatenation using the + operator in loops is widely recognized as inefficient, yet profiling of real-world FinTech microservices showed the pattern frequently, typically introduced when developers quickly assemble dynamic responses. In production, these seemingly harmless patterns become costly because of the scale and concurrency of enterprise systems. In this case, the inefficiency caused elevated allocation rates, more frequent garbage collection, and measurable P99 latency degradation.

This pitfall serves as a reminder that even familiar issues deserve attention in cloud-native and high-load environments. Each += builds a new String by copying everything accumulated so far, so the work grows quadratically with the number of records and every intermediate string becomes garbage, causing frequent GC and memory churn under load. JFR identified increased allocation rates (42%) and frequent minor GCs, and the GC logs before optimization showed minor GCs occurring every 2–3 seconds:

[0.512s][info][gc] GC(22) Pause Young (G1 Evacuation Pause) 32M->10M(64M) 4.921ms

Optimized Code

StringBuilder summaryBuilder = new StringBuilder(incentiveList.size() * 100);

for(IncentiveData data : incentiveList) {

summaryBuilder.append("Region: ")

.append(data.getRegion())

.append(", Manager: ")

.append(data.getManagerId())

.append(", Total: ")

.append(data.getMonthlyPayout())

.append("\n");

}

String summary = summaryBuilder.toString();
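To try both variants outside the service, the following self-contained sketch puts them side by side and lets you check that they produce the same output. The IncentiveData record is a hypothetical stand-in for the article's type (its real fields and types are not shown), and the factor of 100 characters per row is only the same rough estimate used above.

```java
import java.util.List;

final class SummaryBuilderSketch {

    // Hypothetical stand-in for the article's IncentiveData type.
    record IncentiveData(String region, String managerId, double monthlyPayout) {}

    // Quadratic: every += copies the whole accumulated string into a new String.
    static String withConcatenation(List<IncentiveData> rows) {
        String summary = "";
        for (IncentiveData d : rows) {
            summary += "Region: " + d.region() + ", Manager: " + d.managerId()
                    + ", Total: " + d.monthlyPayout() + "\n";
        }
        return summary;
    }

    // Linear: one growable buffer, sized up front from a rough per-row estimate.
    static String withBuilder(List<IncentiveData> rows) {
        StringBuilder sb = new StringBuilder(rows.size() * 100);
        for (IncentiveData d : rows) {
            sb.append("Region: ").append(d.region())
              .append(", Manager: ").append(d.managerId())
              .append(", Total: ").append(d.monthlyPayout())
              .append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        List<IncentiveData> rows = List.of(
                new IncentiveData("EMEA", "M1", 1500.0),
                new IncentiveData("APAC", "M2", 980.5));
        System.out.println(withConcatenation(rows).equals(withBuilder(rows)));
    }
}
```

Because both methods return identical strings, the builder version can replace the concatenating one without changing behavior; the difference only shows up in allocation profiles as the row count grows.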

Before and After Metrics

After controlled load tests, the before-and-after metrics are summarized in the table below. The share of allocations attributed to StringBuilder.append() dropped from 17.3% to 7.8%.

GC logs (after optimization):

[0.545s][info][gc] GC(45) Pause Young (G1 Evacuation Pause) 28M->9M(64M) 3.329ms

| Metric | Before | After |
|---|---|---|
| Young GC Frequency | Every 2.1s | Every 3.4s |
| Avg. Young GC Pause | 5.2 ms | 3.3 ms |
| StringBuilder allocations | ~1.2M / 5 mins | ~520K / 5 mins |
| P99 Latency | 275 ms | 248 ms |
| Heap usage | 235 MB | 188 MB |

Summary

This case shows that even simple coding patterns, like using the + operator in loops, can cause problems in FinTech microservices when running in production. Fixing this problem led to clear improvements in memory use and response times during production loads.

Pitfall #2: Object Creation Inside Loops – Production Impact Case Study

Scenario

A FinTech incentive calculation service experienced performance degradation during peak load processing. The service created new objects inside loops for each incoming record, which caused heavy memory churn and latency issues under high concurrency.

Original Code

for (ManagerPerformanceData data : inputDataList) {

BonusAdjustment adjustment = new BonusAdjustment(

data.getManagerId(), data.getPerformanceScore(), bonusConfig.getMultiplier()

);

adjustmentService.apply(adjustment);

}

Creating objects inside loops is a common pattern, but at scale it results in frequent garbage collection and increased memory usage. During production batch processing, thousands of BonusAdjustment objects were created per request, leading to high allocation rates and reduced throughput. In JFR, BonusAdjustment objects and related DTOs made up ~18% of total allocations, and the GC logs showed minor GCs occurring every 1.9 seconds:

[3.101s][info][gc] GC(102) Pause Young (G1 Evacuation Pause) 40M->13M(80M) 7.501ms

[5.022s][info][gc] GC(103) Pause Young (G1 Evacuation Pause) 45M->15M(80M) 6.883ms
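The GC frequency can be read directly from the uptime stamps of consecutive log lines: 5.022 s minus 3.101 s is about 1.9 s for the two lines above. The sketch below automates that arithmetic for lines of this shape. The class and method names are illustrative, and the pattern assumes heap sizes are printed in megabytes (the M suffix), as in these samples.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class GcLogLineSketch {

    // Matches lines such as:
    // [3.101s][info][gc] GC(102) Pause Young (G1 Evacuation Pause) 40M->13M(80M) 7.501ms
    // Assumption: sizes use the M suffix, as in the sample lines.
    private static final Pattern YOUNG_PAUSE = Pattern.compile(
            "\\[(\\d+\\.\\d+)s\\].*GC\\((\\d+)\\) Pause Young.* (\\d+)M->(\\d+)M\\((\\d+)M\\) (\\d+\\.\\d+)ms");

    record YoungPause(double uptimeSeconds, int gcId, int beforeMb, int afterMb,
                      int heapMb, double pauseMs) {}

    static YoungPause parse(String line) {
        Matcher m = YOUNG_PAUSE.matcher(line);
        if (!m.find()) {
            throw new IllegalArgumentException("Not a young-pause line: " + line);
        }
        return new YoungPause(
                Double.parseDouble(m.group(1)), Integer.parseInt(m.group(2)),
                Integer.parseInt(m.group(3)), Integer.parseInt(m.group(4)),
                Integer.parseInt(m.group(5)), Double.parseDouble(m.group(6)));
    }

    // Seconds elapsed between two young collections, based on JVM uptime.
    static double secondsBetween(String earlierLine, String laterLine) {
        return parse(laterLine).uptimeSeconds() - parse(earlierLine).uptimeSeconds();
    }
}
```

Averaging secondsBetween over a whole gc.log gives the kind of "minor GC every N seconds" figure reported in the tables, and beforeMb minus afterMb shows how much each collection reclaimed.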

Flame graphs showed tight loops dominated by BonusAdjustment construction.

[5.873s][info][gc] GC(112) Pause Young (G1 Evacuation Pause) 35M->11M(80M) 4.883ms

[8.744s][info][gc] GC(113) Pause Young (G1 Evacuation Pause) 38M->12M(80M) 4.112ms

Optimized Code

Map<String, BonusAdjustment> adjustmentCache = new HashMap<>();

for (ManagerPerformanceData data : inputDataList) {

String key = data.getManagerId();

BonusAdjustment adjustment = adjustmentCache.computeIfAbsent(key, id ->

new BonusAdjustment(id, data.getPerformanceScore(), bonusConfig.getMultiplier())

);

adjustmentService.apply(adjustment);

}
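The effect of computeIfAbsent is easiest to see in isolation. In the sketch below, the two records are hypothetical stand-ins for the article's ManagerPerformanceData and BonusAdjustment types, and buildCache is an illustrative helper. It shows that one object is constructed per distinct manager ID, and also that the score of the first record seen for an ID is the one that is kept.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class AdjustmentCacheSketch {

    // Hypothetical stand-ins for the article's types.
    record ManagerPerformanceData(String managerId, double performanceScore) {}
    record BonusAdjustment(String managerId, double score, double multiplier) {}

    // Builds at most one BonusAdjustment per distinct manager ID.
    static Map<String, BonusAdjustment> buildCache(List<ManagerPerformanceData> input,
                                                   double multiplier) {
        Map<String, BonusAdjustment> cache = new HashMap<>();
        for (ManagerPerformanceData data : input) {
            // The mapping function runs only when the key is not yet present.
            cache.computeIfAbsent(data.managerId(),
                    id -> new BonusAdjustment(id, data.performanceScore(), multiplier));
        }
        return cache;
    }

    public static void main(String[] args) {
        List<ManagerPerformanceData> input = List.of(
                new ManagerPerformanceData("M1", 0.9),
                new ManagerPerformanceData("M2", 0.7),
                new ManagerPerformanceData("M1", 0.4)); // repeated ID, different score
        System.out.println(buildCache(input, 1.5));
    }
}
```

Because a repeated ID reuses the adjustment built from the first score, this kind of caching fits best when the score is stable per manager within a batch; otherwise the cache key would need to include the score.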

Before and After Metrics

| Metric | Before | After |
|---|---|---|
| Minor GC Frequency | Every 1.9s | Every 3.6s |
| Young Gen Allocations | ~1.8M / min | ~720K / min |
| P99 Latency | 314 ms | 268 ms |
| CPU (batch peak) | 82% | 63% |

Summary

This case demonstrates how frequent object creation inside loops can lead to production challenges in batch-heavy FinTech microservices. Introducing simple caching reduced object creation, stabilized throughput, and improved latency during peak periods, highlighting the value of optimizing even common coding patterns.

Pitfall #3: Suboptimal Java Collection Usage – Production Impact Case Study

Scenario

In a FinTech microservice aggregating transactional data for financial dashboards, hash-based collections were used without specifying initial capacity or choosing the right implementation. Heavy load caused frequent resizing, poor cache locality, and unnecessary memory pressure, especially when large volumes of keys were rapidly processed.

Original Code

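Map<String, Summary> summaryMap = new HashMap<>(); // "Summary" is a placeholder for computeSummary's return type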

for (Transaction txn : transactions) {

String key = txn.getCustomerId();

summaryMap.put(key, computeSummary(txn));

}

Choosing the wrong collection type or ignoring capacity planning is a frequent oversight. In production, this can lead to hash collisions, rehashing overhead, and poor CPU cache utilization, which in turn cause throughput drops and latency spikes. Profiling revealed this pattern as a hidden contributor to GC pressure and uneven processing performance across service instances. JFR showed high allocation spikes from HashMap.resize() and Node[] arrays (used internally by HashMap), and the GC logs before optimization showed frequent minor GCs due to memory churn from collection growth:

[7.810s][info][gc] GC(88) Pause Young (G1 Evacuation Pause) 56M->22M(96M) 6.114ms

[10.114s][info][gc] GC(89) Pause Young (G1 Evacuation Pause) 60M->21M(96M) 5.932ms

async-profiler traced the high allocation rates to HashMap.putVal() and internal array resizing. Before optimization, HashMap.putVal() and resize() accounted for 19.4% of allocations; after optimization, this dropped to 6.1%, improving memory predictability.

[12.784s][info][gc] GC(91) Pause Young (G1 Evacuation Pause) 47M->16M(96M) 3.991ms

[15.002s][info][gc] GC(92) Pause Young (G1 Evacuation Pause) 50M->17M(96M) 3.778ms

Optimized Code

int expectedSize = transactions.size();

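Map<String, Summary> summaryMap = new HashMap<>(expectedSize); // "Summary" is a placeholder for computeSummary's return type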

for (Transaction txn : transactions) {

String key = txn.getCustomerId();

summaryMap.put(key, computeSummary(txn));

}
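Pre-sizing a HashMap interacts with its load factor. With the default of 0.75, a map resizes once its entry count exceeds 75% of the table size, so passing the expected entry count as the initial capacity can still trigger one resize. A hedged sketch of the usual adjustment follows; the class and method names are illustrative. On Java 19 and later, HashMap.newHashMap(int) performs the same calculation, but it is not available on the Java 17 setup used here.

```java
import java.util.HashMap;
import java.util.Map;

final class PresizedMapSketch {

    private static final double DEFAULT_LOAD_FACTOR = 0.75;

    // Smallest initial capacity that holds expectedEntries without a resize.
    static int capacityFor(int expectedEntries) {
        return (int) Math.ceil(expectedEntries / DEFAULT_LOAD_FACTOR);
    }

    // Creates a HashMap that can take expectedEntries puts without growing.
    static <K, V> Map<K, V> newPresizedMap(int expectedEntries) {
        return new HashMap<>(capacityFor(expectedEntries));
    }

    public static void main(String[] args) {
        // For 1,000 expected keys the requested capacity is 1,334; HashMap
        // rounds this up to the next power of two internally.
        System.out.println(capacityFor(1_000));
        Map<String, Integer> totals = newPresizedMap(1_000);
        totals.put("customer-1", 42);
        System.out.println(totals);
    }
}
```

If many customer IDs repeat, transactions.size() overestimates the number of distinct keys, so the map may be larger than needed; that trades some memory for the absence of resizes.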

Before and After Metrics

| Metric | Before | After |
|---|---|---|
| Minor GC Frequency | Every 2.4s | Every 4.2s |
| HashMap Resizing Events | High | Minimal |
| CPU Usage (peak) | 77% | 61% |
| P99 Latency | 298 ms | 243 ms |
| Allocation Rate (collections) | ~1.5M / 5 min | ~640K / 5 min |

Summary

Misuse of Java collections, mainly HashMap, without proper sizing, can cause frequent memory resizing and GC stress in production environments. This optimization reduced memory allocations, GC frequency, and response time variability by pre-sizing the collection based on expected load. This highlights the importance of data structure tuning in production-grade systems.

Pitfall #4: Overgeneralized Exception Handling – Production Impact Case Study

Scenario

A generic exception block was used to wrap business logic in a FinTech API service that validates and processes high-volume transaction data. Although this simplified initial error management, it introduced runtime overhead and made performance debugging difficult under load. As traffic scaled, this broad try-catch structure masked root causes and added invisible control flow penalties.

Original Code

try {

processTransaction(input);

} catch (Exception e) {

logger.error("Transaction failed", e);

}

Catching a general Exception can trap both recoverable and unrecoverable errors. This makes the code harder to understand and can hurt performance. In high-performance services, it hides helpful stack traces, increases CPU usage, and floods the logs during peak traffic. Profiling showed that it added latency, even when exceptions happened rarely. JFR indicated noticeable time spent in exception handling paths even during normal operation. The GC logs before optimization showed no abnormal GC activity, but elevated CPU usage correlated with exception-heavy paths, and CPU samples showed exception-related methods (Throwable.fillInStackTrace) consuming cycles during peak periods.

Optimized Code

try {

validateInput(input);

executeTransaction(input);

} catch (ValidationException ve) {

logger.warn("Validation failed", ve);

} catch (ProcessingException pe) {

logger.error("Processing error", pe);

}
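A runnable version of the targeted-catch structure makes the behavioral difference visible: expected failures are handled by type, while an unexpected exception is no longer swallowed and propagates to the caller. Everything in this sketch is hypothetical. ValidationException and ProcessingException only mirror the names used above, and the string-based inputs and return values are placeholders for real transaction handling.

```java
final class TargetedCatchSketch {

    // Hypothetical exception types mirroring the names used in the article.
    static class ValidationException extends RuntimeException {
        ValidationException(String message) { super(message); }
    }

    static class ProcessingException extends RuntimeException {
        ProcessingException(String message) { super(message); }
    }

    static void validateInput(String input) {
        if (input == null || input.isBlank()) {
            throw new ValidationException("empty input");
        }
    }

    static void executeTransaction(String input) {
        if (input.startsWith("fail")) {
            throw new ProcessingException("cannot process " + input);
        }
        if (input.startsWith("bug")) {
            // Simulates a programming error that should not be hidden.
            throw new IllegalStateException("unexpected state for " + input);
        }
    }

    // Handles only the failures this layer knows how to deal with.
    static String handle(String input) {
        try {
            validateInput(input);
            executeTransaction(input);
            return "OK";
        } catch (ValidationException ve) {
            return "REJECTED"; // expected, low-severity outcome
        } catch (ProcessingException pe) {
            return "FAILED"; // expected, needs attention
        }
    }

    public static void main(String[] args) {
        System.out.println(handle("tx-1"));
        System.out.println(handle(""));
        System.out.println(handle("fail-2"));
    }
}
```

Calling handle("bug-3") throws IllegalStateException instead of returning a status, which is the point: with catch (Exception e) that defect would have been logged as just another failed transaction.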

Before and After Metrics

| Metric | Before | After |
|---|---|---|
| CPU Usage (peak) | 79% | 65% |
| Exception frequency (per min) | 1.6K | 340 |
| P99 latency | 302 ms | 255 ms |
| Log volume | 1.9K | 460 |

Before Optimization, Throwable.fillInStackTrace() and related exception handling accounted for ~13.8% of CPU samples. After Optimization, it dropped to under 4.5%, reducing unnecessary control path overhead.

[12.502s][info][gc] GC(104) Pause Young (G1 Evacuation Pause) 39M->14M(80M) 3.883ms

[15.023s][info][gc] GC(105) Pause Young (G1 Evacuation Pause) 41M->15M(80M) 3.721ms

Summary

Overly broad exception handling blocks, while convenient, add hidden performance costs and complicate operational debugging in production systems. Replacing generic catch (Exception) blocks with targeted exception types reduced error noise and CPU usage and improved latency. This optimization improves both system performance and maintainability in high-throughput FinTech APIs.

Pitfall #5: Inefficient Concurrency Management – Production Impact Case Study

Scenario

A cloud-native FinTech service used a default unbounded thread pool to handle incoming REST requests for trade reconciliation. Under high traffic, this caused thread contention, increased context switching, and ultimately led to degraded performance and dropped requests. Unbounded or poorly tuned thread pools may seem efficient under light load but can collapse under sustained concurrency.

Original Code

@Bean

public Executor taskExecutor() {

return Executors.newCachedThreadPool();

}

Profiling showed that excessive threads increased GC pressure, delayed task execution, and spiked CPU utilization due to thread context switches. JFR showed high thread counts and blocking I/O events. The GC logs before optimization showed frequent young GCs, attributed to memory pressure from thread stacks. Higher CPU time in Thread.run() and Unsafe.park() indicated contention.

Optimized Code

@Bean

public Executor taskExecutor() {

ThreadPoolTaskExecutor executor = new ThreadPoolTaskExecutor();

executor.setCorePoolSize(10);

executor.setMaxPoolSize(50);

executor.setQueueCapacity(500);

executor.setThreadNamePrefix("Reconcile-Thread-");

executor.initialize();

return executor;

}
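The same limits can be tried without Spring using the JDK's ThreadPoolExecutor, which is useful for quick experiments. This is a sketch under assumptions, not the service's configuration: the 60-second keep-alive and the CallerRunsPolicy rejection handler are choices made here for illustration, and the class and method names are hypothetical. Note that a ThreadPoolExecutor only grows beyond its core size once the queue is full, so with these numbers extra threads appear only after 500 tasks are waiting.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class BoundedPoolSketch {

    // Core 10, max 50, queue 500: the same limits as the Spring bean above.
    static ThreadPoolExecutor newBoundedPool() {
        return new ThreadPoolExecutor(
                10, 50, 60L, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(500),
                // Assumed policy: when saturated, the submitting thread runs
                // the task itself, which slows producers instead of dropping work.
                new ThreadPoolExecutor.CallerRunsPolicy());
    }

    // Returns {completed task count, largest pool size observed}.
    static int[] runTasks(int taskCount) {
        ThreadPoolExecutor pool = newBoundedPool();
        AtomicInteger completed = new AtomicInteger();
        for (int i = 0; i < taskCount; i++) {
            pool.execute(completed::incrementAndGet);
        }
        pool.shutdown();
        try {
            pool.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] {completed.get(), pool.getLargestPoolSize()};
    }

    public static void main(String[] args) {
        int[] result = runTasks(5_000);
        System.out.println("completed=" + result[0] + ", largestPool=" + result[1]);
    }
}
```

However many tasks are submitted, the thread count stays at or below 50, in contrast to a cached pool, which creates a new thread whenever none is idle.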

Before and After Metrics

| Metric | Before | After |
|---|---|---|
| Thread Count (Peak) | 412 | 76 |
| Context Switch Rate | High | Moderate |
| P99 Latency | 337 ms | 249 ms |
| Rejected tasks | 87 | 0 |

Before optimization, Thread.run() and Unsafe.park() represented over 16.2% of CPU samples; after optimization, this dropped to 4.3%, improving execution efficiency.

[20.301s][info][gc] GC(109) Pause Young (G1 Evacuation Pause) 46M->17M(96M) 4.202ms

[23.742s][info][gc] GC(110) Pause Young (G1 Evacuation Pause) 47M->18M(96M) 4.011ms

Summary

Concurrency mismanagement, such as using unbounded thread pools, can severely impact scalability and reliability in production systems. Introducing a properly tuned thread pool aligned with system workload reduced thread contention, improved task execution time, and eliminated request drops. This optimization plays a crucial role in sustaining system stability under peak load.

Conclusion

Small coding patterns can have a big impact on performance, especially in cloud-native and FinTech systems. This article showed how common Java practices, like string concatenation in loops, object creation inside loops, poor collection usage, broad exception handling, and unbounded thread pools, can slow down applications under load. Using tools like JFR, GC logs, and async-profiler, we identified these issues and applied practical fixes. Each optimization led to improvements in memory usage, CPU load, and latency. By paying attention to these details, developers can build more efficient and stable systems.