My Journey Down the Rabbit Hole of Vibe Coding

Building Vaani (वाणी) – A Minimal, Private, Universal Speech-to-Text Desktop Application

A few days back, I was religiously watching the latest video from Andrej Karpathy where he introduced Vibe Coding.

I have been using AI code assistants for a while but am still highly involved in the coding process. The AI mostly acts as smart autocompletion: taking over tedious boilerplate, writing docstrings, or occasionally explaining the code that I wrote yesterday and have conveniently forgotten. Vibe coding sounded more like graduating from autocomplete to actual co-creation – a shift from AI as an assistant to AI as an actual coding partner.

Intrigued by this potential paradigm shift, I decided to experience it firsthand. In the same video, Andrej shared that he uses voice as his primary input roughly 50% of the time, as it’s more intuitive and efficient. He suggested some options for Mac that act as universal speech-to-text tools and work across multiple apps. I primarily work on Windows for interacting with apps and on Linux over the command line. As I couldn’t find any good options for Windows (other than the built-in Voice Access, which is super slow), I decided to build one myself. I had a few other potential ideas but settled on this one because I wanted to:

- build it in a programming language I know well – Python

- work on something I am conceptually unfamiliar with – audio capture and processing, native UI

- build a tool that I would really use and not just a toy

- create something useful for the community that I can open-source

- learn something new along the way

Goal – Build a minimal, private, universal speech-to-text desktop application

- Minimal – Does one thing really well – speech-to-text

- Private – Nothing leaves my machine, everything offline

- Universal – Should work with any Windows app

- Cross Platform – Good to have

I call it Vaani (वाणी), meaning “speech” or “voice” in Sanskrit.

GitHub

https://github.com/webstruck/vaani-speech-to-text

Installation

pip install vaani-speech-to-text

Demo

This article chronicles the journey of building Vaani. It’s a practical exploration of what vibe coding actually feels like – the exhilarating speed, the unexpected roadblocks, the moments of genuine insight, and the lessons learned when collaborating intensely with an AI. I decided to use Claude Sonnet 3.7, the best (again, based on general vibe) available coding assistant at that time. In the meantime, Google Gemini 2.5 Pro was released and I decided to use it as a code reviewer.

BTW, this article is largely dictated using Vaani 😊.

Let’s vibe.

The Setup

AI Developer: Claude Sonnet 3.7

AI Code Reviewer: Gemini 2.5 Pro Preview 03-25

The AI Developer and AI Code Reviewer always had the complete code as context for each prompt. I started a fresh conversation once a certain goal was achieved, e.g. a bug was fixed or a feature was implemented and working. I did this to manage the context window and ensure the best AI performance. I did not use any agentic IDEs (e.g. Cursor, Windsurf, etc.) and instead relied on Claude Desktop and Google AI Studio to keep it pure. I also avoided any manual code changes, with the intent to release the code as open source for community scrutiny.

The Initial Spark: From Zero to Scaffolding in Seconds

So, where do we begin? Traditionally, this involves meticulous planning: outlining components, designing interfaces, choosing libraries, and setting up the project structure. Instead, I decided to start with a lazy prompt as shown below.

I want to build a lightweight speech to text app in Python for Windows users. The idea is to help Windows users write things quickly using voice in any application e.g. word, powerpoint, browser etc. The app should work locally without the internet for privacy. Should activate using hot key or hot word.

And true to its reputation, Claude Sonnet 3.7 went completely berserk. It generated a comprehensive application structure almost instantly, complete with:

- System tray integration using `Tkinter`

- Global hotkey detection using `keyboard`

- Visual feedback indicator UI

- Settings persistence UI

- Speech to Text using `Vosk`

- Basic audio manager

- Main entry point

- Packaging using `pyinstaller`

The initial phase perfectly captured the allure of vibe coding: bypassing hours of design and foundational coding, moving directly from the idea to a tangible (albeit buggy) application skeleton. The feeling was one of incredible acceleration and possibilities.

Riding the Waves: The Core Iteration Loop

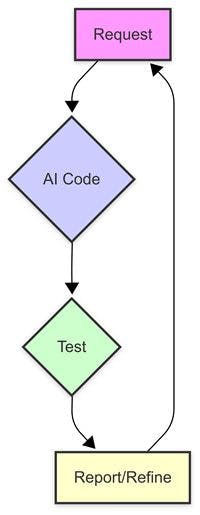

With the basic components in place, the real development began by settling into a distinct rhythm – the core loop of AI-assisted vibe coding:

- Feature Request / Bug Report: I’d describe a desired feature (“Let’s add hot word detection”) or report an issue (“The transcription results aren’t appearing!”). Often, bug reports included error logs or screenshots.

- AI Code Generation: The AI would process the request and generate code snippets, sometimes modifying existing functions, sometimes adding entirely new modules.

- Integration & Testing: I would integrate the AI’s code into the application and manually test the functionality. Does it work? Does it feel right?

- Feedback / Refinement: If it worked, we moved on. If not (which was frequent!), I’d go back to step 1, providing more specific error details or describing the undesired behavior.

This loop was incredibly fast, but also heavily reactive. We weren’t following a grand design; we were navigating by sight, fixing problems only after they surfaced. Key challenges emerged rapidly:

- Callback Conundrums: Initial attempts at connecting different parts of the application (like audio input to the transcription engine) simply failed to communicate correctly.

- Concurrency Complexities: Integrating background tasks (like continuous audio processing) with the UI led to classic concurrency issues – crashes or hangs related to accessing shared resources or updating the UI from the wrong thread. The AI proposed solutions involving queues and synchronization mechanisms, but it often took multiple attempts to get right.

- UI State Persistence: Getting the settings window to reliably save and load user preferences proved surprisingly difficult. Ensuring simple controls like checkboxes, sliders, and dropdowns correctly reflected and stored their state required significant back-and-forth.

- Continuous Speech Processing: When asked to build a continuous speech processing system, AI came up with an overwhelmingly sophisticated solution that included continuous capture, segment ordering, parallel processing, ordered insertion, context awareness, and so on. It worked in principle but was still buggy. Finally, a pushback and a little hint nudged it towards a simpler approach that worked reasonably well.
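The queue-based approach mentioned under the concurrency issue can be sketched roughly as follows. This is a minimal illustration using only the standard library, not Vaani's actual code: `transcribe_worker`, `drain_results`, and the fake "audio chunks" are hypothetical, and the UI thread is simulated by the main thread. The point is that the background thread never touches UI state directly; it only puts results on a `queue.Queue`, and the UI side picks them up.

```python
import queue
import threading

# Sentinel object that tells the consumer the worker has finished.
_DONE = object()


def transcribe_worker(chunks, results):
    """Background thread: turn audio chunks into text and enqueue it.

    The "transcription" is a stand-in (upper-casing a string); a real app
    would call its speech engine here instead.
    """
    for chunk in chunks:
        results.put(chunk.upper())
    results.put(_DONE)


def drain_results(results, timeout=1.0):
    """UI-thread side: collect results until the worker signals completion."""
    collected = []
    while True:
        item = results.get(timeout=timeout)
        if item is _DONE:
            return collected
        collected.append(item)


def run_demo():
    """Start the worker, then consume its output on the calling thread."""
    results = queue.Queue()
    worker = threading.Thread(
        target=transcribe_worker,
        args=(["hello", "world"], results),
        daemon=True,
    )
    worker.start()
    texts = drain_results(results)  # only this thread would update the UI
    worker.join()
    return texts


if __name__ == "__main__":
    print(run_demo())
```

In a real GUI the drain step would usually be non-blocking and run on a timer or event callback, so the interface stays responsive while waiting for results.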

This phase highlighted the raw power of AI for iteration but also the potential chaos of debugging code generated by another entity, relying on the AI to fix its own mistakes based on your observations.

“Wait, Why Are We Doing This?” – Pivots and Necessary Reality Checks

While Claude demonstrated impressive coding capabilities, getting most things right on the first go, it wasn’t infallible. Several instances, particularly regarding architectural choices, forced us to fundamentally rethink Claude’s suggestions. Claude seemed eager to over-engineer its solutions, making them extremely complex in an attempt to make them generic. For example, when tackling the fragmented text output, Claude proposed a sophisticated data buffering class. It worked but felt overly complex. Once I questioned the need for this complexity, it conceded and we pivoted to a much simpler, direct implementation based on detecting natural pauses. This is when I realized that developer intuition about simplicity and pragmatism is a valuable counterbalance to potential AI over-enthusiasm.
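To make the "detect natural pauses" idea concrete, here is a minimal sketch. It assumes audio arrives as fixed-size frames of integer samples and that a pause is a run of consecutive low-energy frames. The function names, the threshold, and the frame counts are illustrative assumptions, not Vaani's actual implementation or values.

```python
import math


def rms(frame):
    """Root-mean-square energy of one frame of integer samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def split_on_pauses(frames, silence_threshold=500.0, min_silent_frames=3):
    """Group frames into utterances, closing one after a long enough pause.

    A frame counts as silent when its RMS is below `silence_threshold`.
    An utterance is closed once `min_silent_frames` silent frames in a row
    follow some speech. Silent frames themselves are not kept.
    Returns a list of utterances, each a list of speech frames.
    """
    utterances = []
    current = []
    silent_run = 0
    for frame in frames:
        if rms(frame) >= silence_threshold:
            current.append(frame)
            silent_run = 0
        elif current:
            silent_run += 1
            if silent_run >= min_silent_frames:
                utterances.append(current)
                current = []
                silent_run = 0
    if current:  # flush speech still buffered at the end of the stream
        utterances.append(current)
    return utterances


if __name__ == "__main__":
    loud, quiet = [1000] * 4, [0] * 4
    stream = [loud, loud, quiet, loud, quiet, quiet, quiet, loud]
    print([len(u) for u in split_on_pauses(stream)])
```

Each completed utterance could then be handed to the recognizer as a unit, so a short hesitation does not split a sentence while a longer pause does.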

Another instance was audio calibration. We implemented it and, after Gemini rightly pointed out an efficiency issue, persisted the result in settings. Later, a practical thought emerged: “Won’t this calibration be specific to the microphone used?” This real-world usage scenario revealed a bug missed during generation. Claude first suggested storing calibration settings per device, but then obliged with a simpler solution: just recalibrate if the input device changes. Again, considering the practical usage context (most users are unlikely to switch input devices frequently), persisting audio calibration for only a single device made sense.
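The "recalibrate only if the input device changes" rule might look something like the sketch below. It is an illustration under stated assumptions: the JSON settings layout, the `noise_floor` value, and the `calibrate` callable are all hypothetical and not taken from Vaani's code.

```python
import json
import tempfile
from pathlib import Path


def load_settings(path):
    """Read settings from a JSON file, or return an empty dict if missing."""
    path = Path(path)
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def save_settings(path, settings):
    """Write settings back to disk as JSON."""
    Path(path).write_text(json.dumps(settings, indent=2), encoding="utf-8")


def get_noise_floor(path, device_name, calibrate):
    """Return the stored noise floor, recalibrating if the device changed.

    `calibrate` is a hypothetical callable that measures the noise floor of
    the current microphone. Only one device's calibration is ever stored.
    """
    settings = load_settings(path)
    stored = settings.get("calibration")
    if stored and stored.get("device") == device_name:
        return stored["noise_floor"]  # same microphone: reuse saved value
    noise_floor = calibrate()
    settings["calibration"] = {"device": device_name, "noise_floor": noise_floor}
    save_settings(path, settings)
    return noise_floor


def demo():
    """Calibration runs once per device; the second call reuses the file."""
    measurements = iter([0.02, 0.05])  # pretend noise floors for two mics
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "settings.json"
        first = get_noise_floor(path, "USB Mic", lambda: next(measurements))
        again = get_noise_floor(path, "USB Mic", lambda: next(measurements))
        other = get_noise_floor(path, "Headset", lambda: next(measurements))
    return first, again, other


if __name__ == "__main__":
    print(demo())
```

Keeping a single calibration entry trades a rare extra calibration (when switching microphones back and forth) for a much simpler settings format.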

These moments underscore that effective vibe coding isn’t passive acceptance; it’s an active dialogue where the developer guides, questions, and sometimes corrects the AI’s trajectory.

Defining the “Vibe”: How Vaani Embodied This Approach

Reflecting on the Vaani development journey, it exhibited the core characteristics often associated with vibe coding:

- Minimal upfront specification: Started with a goal, not a detailed blueprint.

- AI as primary implementer: The AI wrote the vast majority of the initial code and subsequent features/fixes.

- Intuition-driven refinements: Changes were often driven by subjective testing (“the quality feels poor”, “the UI element should be movable”) rather than formal requirements.

- Emergent design: The application’s architecture and feature set evolved organically and reactively. Examples include simplifying the core audio pipeline, adding concurrency controls later, and replacing Tkinter with PySide6 for simpler threading and a modern look.

- Debugging delegation: My role in fixing bugs was often to report symptoms accurately so the AI could generate the cure.

This aligns well with the current discussion that defines vibe coding by its speed, reliance on natural language, and sometimes a lesser degree of developer scrutiny. However, my experience suggests it exists on a spectrum. While Vaani started near the “pure vibe” end, the project naturally shifted toward more structure (requesting modularization and code reviews) as it matured and approached release.

Beyond the Hype: Novel Insights from the Trenches

Working so closely with the AI on a complete project yielded some insights that go beyond the typical “AI is fast but makes mistakes” narrative.

- The AI has an over-engineering tendency: Repeatedly, the AI’s first solution was more complex than necessary (e.g. initial architecture, text buffering, configuration handling). It seems biased towards comprehensive or generic solutions, which requires the developer to actively filter for simplicity and context.

- The developer as an essential filter and validator: This wasn’t just passive coding. My role evolved into that of a critical validator, reality checker, and complexity filter. Asking the AI “why this architecture?” was as important as requesting new features. Effective vibe coding requires active human engagement.

- The inevitable shift towards structure: Pure vibe coding got the project off the ground incredibly fast. However, to make Vaani maintainable and release-ready (especially for open source), a conscious shift was necessary. Explicitly requesting modularization, code quality analysis, and refactoring became crucial in later stages. Vibe coding might be step one, but traditional engineering principles are still needed for robust, maintainable software.

- Implicit learning vs. Deep Understanding: I learned a lot by debugging the AI’s code. However, because the AI often provided fixes directly, I didn’t always need to achieve the deepest level of understanding of why certain complex issues occurred (like subtle race conditions or specific UI framework quirks). This highlights a potential trade-off between speed and depth of learning.

The Double-Edged Sword: Weighing the Pros and Cons

Pros:

- Blazing Speed: Prototyping and initial feature implementation are breathtakingly fast.

- Complexity Tackling: AI can generate code for complex tasks (integrating libraries, handling concurrency) quickly, lowering the barrier to entry.

- Boilerplate Buster: Tedious setup and repetitive code are handled automagically.

- Forced Learning (via Debugging): Fixing AI errors often forces understanding the problem domain, indirectly fostering learning.

Cons:

- High Risk of Subtle Bugs: Rapid generation and reactive debugging can easily miss edge cases, race conditions, or deeper logical flaws.

- Potential for Poor Architecture: The AI’s initial design choices might be suboptimal or overly complex if not critically evaluated by the developer.

- Difficult Debugging Cycles: Fixing code you didn’t write, especially when the AI struggles with the underlying issue (like complex state or concurrency), can be frustrating and time-consuming. Remember that feeling when you’re asked to fix someone else’s code?

- Maintainability Concerns: Organically grown, AI-generated code can become tangled and hard to understand without deliberate refactoring and structuring.

- Skill Erosion Potential: Over-reliance might hinder the development of fundamental design, debugging, and architectural skills and, most importantly, human intuition.

- Non-Functional Requirements Neglect: Security, performance, resource management, and comprehensive error handling can easily be overlooked in the rush for functionality.

Taming the Vibe: Recommendations for Effective Collaboration

Vibe coding is undoubtedly a powerful tool, but it requires skill to wield effectively. If you are an experienced developer considering this approach, here are my general recommendations based on my experience of building Vaani.

- Validate, Don’t Just Accept: Treat AI code as a draft. Question its architectural choices (“Why this pattern? Is it appropriate here?”). Ask for alternatives. Test thoroughly beyond the “happy path.”

- Act as a Complexity Filter: If an AI solution seems overly complex or uses obscure patterns without good reason, push back. Ask for simpler, more standard approaches. Prioritize simplicity and maintainability.

- Plan for Structure: Recognize that the initial vibe-coded prototype will likely need refinement. Budget time for refactoring – improving modularity, adding clear documentation (comments, READMEs), and enhancing code quality before considering a project stable or release-ready.

- Focus on Understanding: Don’t just copy-paste AI code. Use the AI as a tutor. When it provides a fix or a complex piece of code, ask it to explain the reasoning behind it. Understanding the “why” is critical.

- Leverage Established Tooling and Practices: As AI accelerates iteration, maintaining software quality becomes even more critical. Embrace automated testing early: unit and integration tests provide essential safety nets against regressions. Pair this with code quality and static analysis tools (like linters and analyzers) to catch bugs, style issues, and anti-patterns that may slip past both humans and AI.
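As a small illustration of the "automated testing early" advice, here is a sketch using the standard library's `unittest` module. `join_segments` is a hypothetical helper of the kind a dictation app might contain; it is not a function from Vaani. The value of a test like this is that when the AI later rewrites the helper, a regression shows up immediately instead of during manual testing.

```python
import unittest


def join_segments(segments):
    """Join transcribed segments into one string with single spaces.

    Hypothetical helper: strips each segment and skips empty ones.
    """
    cleaned = (s.strip() for s in segments)
    return " ".join(s for s in cleaned if s)


class JoinSegmentsTest(unittest.TestCase):
    def test_joins_with_single_spaces(self):
        self.assertEqual(join_segments(["hello ", " world"]), "hello world")

    def test_skips_empty_segments(self):
        self.assertEqual(join_segments(["", "hi", "   "]), "hi")

    def test_empty_input(self):
        self.assertEqual(join_segments([]), "")


def run_tests():
    """Run the test case programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(JoinSegmentsTest)
    return unittest.TextTestRunner().run(suite)


if __name__ == "__main__":
    run_tests()
```

In a project layout, the test class would normally live in its own file and be discovered by a test runner, rather than being run through a helper like `run_tests`.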

Conclusion: Vibe Coding – A Powerful Partner, Not a Replacement

My journey building Vaani confirmed that AI-assisted “vibe coding” is more than just hype. It fundamentally changes the development workflow, offering unprecedented speed in translating ideas into functional code. It allowed me, a single developer, to build a reasonably complex application in roughly 15 hours, a fraction of the time it might traditionally have taken.

However, it’s not a magic wand. It’s a collaboration requiring communication, critical thinking, and oversight. The AI acts like an incredibly fast, knowledgeable, but sometimes over-enthusiastic assistant. It can generate intricate logic in seconds but might miss the simplest solution or overlook real-world constraints and practicality. It can fix bugs instantly but might struggle with the nuances.

The real power emerges when the developer actively engages – guiding the AI, questioning its assumptions, validating its output, and applying fundamental software engineering principles. Vibe coding doesn’t replace developer skills; it shifts them towards architecture, validation, effective prompting, and critical integration. It’s an exciting, powerful, and sometimes challenging new way to build, offering a glimpse into a future where human creativity and artificial intelligence work hand-in-hand, guided by sound engineering judgment.