Table of Links

1 Introduction

2 Related Work

2.1 Semantic Typographic Logo Design

2.2 Generative Model for Computational Design

2.3 Graphic Design Authoring Tool

3 Formative Study

3.1 General Workflow and Challenges

3.2 Concerns in Generative Model Involvement

3.3 Design Space of Semantic Typography Work

4 Design Consideration

5 TypeDance and 5.1 Ideation

5.2 Selection

5.3 Generation

5.4 Evaluation

5.5 Iteration

6 Interface Walkthrough and 6.1 Pre-generation stage

6.2 Generation stage

6.3 Post-generation stage

7 Evaluation and 7.1 Baseline Comparison

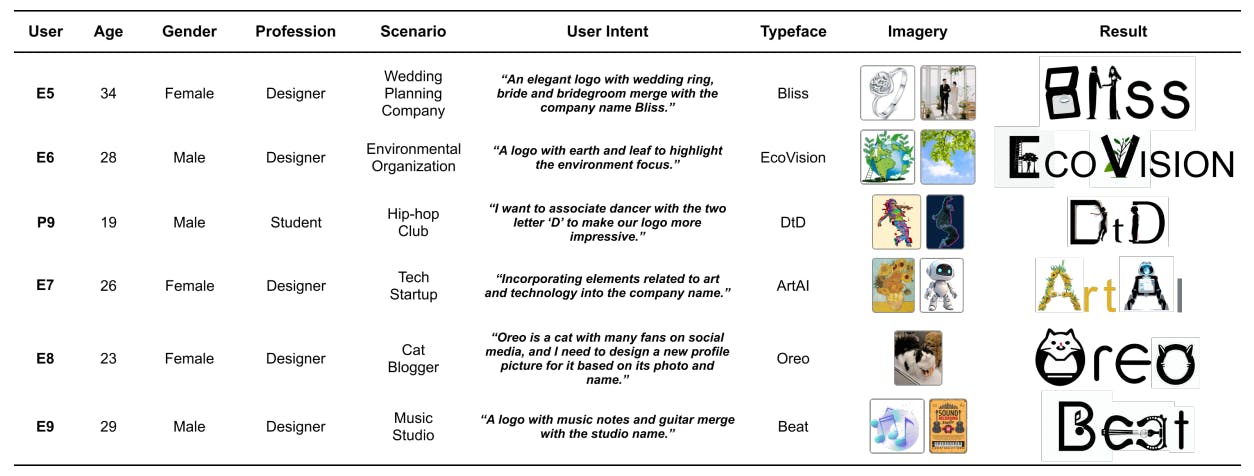

7.2 User Study

7.3 Results Analysis

7.4 Limitation

8 Discussion

8.1 Personalized Design: Intent-aware Collaboration with AI

8.2 Incorporating Design Knowledge into Creativity Support Tools

8.3 Mix-User Oriented Design Workflow

9 Conclusion and References

8.3 Mix-User Oriented Design Workflow

To offer a “low threshold” that lets novices steer the generation and a “high ceiling” that lets experts achieve more advanced effects, TypeDance integrates a simulatable design workflow. Creativity support tools are designed to provide a comprehensive authoring experience, addressing both the common and the unique emphases of diverse users [57, 58, 65]. As illustrated in Figure 10, experts and novices share some preferences and differ in others. Functions that both groups value, such as selection and generation, can be considered central to the workflow and warrant deeper investigation. Other functions are valued differently depending on expertise. Experts tend to prioritize evaluation and refinement, whereas novices may view these as optional. Conversely, novices, who have less design background, find ideation more helpful than experts do. Functions with differing preferences act as a “wide wall,” accommodating optional user requirements. Although they are not mandatory like the central functions, omitting them compromises the overall integrity of the workflow.

9 CONCLUSION

This study distills design knowledge from real-world examples, summarizes generalizable design patterns and a simulatable design workflow, and explores the creation of semantic typographic logos by blending typeface and imagery while maintaining legibility. We introduce TypeDance, an authoring tool based on a generative model that supports a personalized design workflow comprising ideation, selection, generation, evaluation, and iteration. With TypeDance, creators can flexibly choose typefaces at different levels of granularity and blend them with specific imagery using combinable design factors. TypeDance also allows users to adjust the generated results along the typeface-imagery spectrum and offers post-editing for individual elements. Feedback from general users and experts validates the effectiveness of TypeDance and provides valuable insights into future opportunities. In future work, we plan to extend the functionality of TypeDance toward a more comprehensive workflow and to explore new techniques and interactions that enhance human creativity.

REFERENCES

[1] Saleema Amershi, Dan Weld, Mihaela Vorvoreanu, Adam Fourney, Besmira Nushi, Penny Collisson, Jina Suh, Shamsi Iqbal, Paul N Bennett, Kori Inkpen, et al. 2019. Guidelines for human-AI interaction. In Proceedings of the 2019 chi conference on human factors in computing systems. 1–13.

[2] Apple. 2021. Human Interface Guidelines. https://developer.apple.com/design/human-interface-guidelines/, Last accessed on 2023-12-13.

[3] Gregory Ashworth and Mihalis Kavaratzis. 2009. Beyond the logo: Brand management for cities. Journal of Brand Management 16 (2009), 520–531.

[4] Daniel Berio, Frederic Fol Leymarie, Paul Asente, and Jose Echevarria. 2022. Strokestyles: Stroke-based segmentation and stylization of fonts. ACM Transactions on Graphics 41, 3, Article 28 (2022), 21 pages.

[5] James Betker, Gabriel Goh, Li Jing, Tim Brooks, Jianfeng Wang, Linjie Li, Long Ouyang, Juntang Zhuang, Joyce Lee, Yufei Guo, et al. 2023. Improving image generation with better captions. Computer Science. https://cdn.openai.com/papers/dall-e-3.pdf (2023).

[6] Yining Cao, Jane L E, Zhutian Chen, and Haijun Xia. 2023. DataParticles: Block-based and language-oriented authoring of animated unit visualizations. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems. 1–15.

[7] Terry L Childers and Jeffrey Jass. 2002. All dressed up with something to say: Effects of typeface semantic associations on brand perceptions and consumer memory. Journal of Consumer Psychology 12, 2 (2002), 93–106.

[8] Lydia B Chilton, Ecenaz Jen Ozmen, Sam H Ross, and Vivian Liu. 2021. VisiFit: Structuring iterative improvement for novice designers. In Proceedings of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, 1–14.

[9] Lydia B Chilton, Savvas Petridis, and Maneesh Agrawala. 2019. VisiBlends: A flexible workflow for visual blends. In Proceedings of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, 1–14.

[10] John Joon Young Chung and Eytan Adar. 2023. PromptPaint: Steering Text-to-Image Generation Through Paint Medium-like Interactions. In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology. 1–17.

[11] John Joon Young Chung, Wooseok Kim, Kang Min Yoo, Hwaran Lee, Eytan Adar, and Minsuk Chang. 2022. TaleBrush: Sketching stories with generative pretrained language models. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. 1–19.

[12] Weiwei Cui, Xiaoyu Zhang, Yun Wang, He Huang, Bei Chen, Lei Fang, Haidong Zhang, Jian-Guan Lou, and Dongmei Zhang. 2019. Text-to-viz: Automatic generation of infographics from proportion-related natural language statements. IEEE Transactions on Visualization and Computer Graphics 26, 1 (2019), 906–916.

[13] João M Cunha, Nuno Lourenço, Pedro Martins, and Penousal Machado. 2020. Visual blending for concept representation: A case study on emoji generation. New Generation Computing 38, 4 (2020), 739–771.

[14] Laura Devendorf and Kimiko Ryokai. 2013. AnyType: provoking reflection and exploration with aesthetic interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 1041–1050.

[15] Ryan Dew, Asim Ansari, and Olivier Toubia. 2022. Letting logos speak: Leveraging multiview representation learning for data-driven branding and logo design. Marketing Science 41, 2 (2022), 401–425.

[16] Evelyn Fix and Joseph Lawson Hodges. 1989. Discriminatory analysis. Nonparametric discrimination: Consistency properties. International Statistical Review/Revue Internationale de Statistique 57, 3 (1989), 238–247.

[17] Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H. Bermano, Gal Chechik, and Daniel Cohen-Or. 2022. An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion. arXiv:2208.01618

[18] Rinon Gal, Or Patashnik, Haggai Maron, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2022. StyleGAN-NADA: CLIP-guided domain adaptation of image generators. ACM Transactions on Graphics 41, 4, Article 141 (2022), 13 pages.

[19] Google. 2019. People + AI Guidebook. https://pair.withgoogle.com/, Last accessed on 2023-12-13.

[20] Pamela W Henderson and Joseph A Cote. 1998. Guidelines for selecting or modifying logos. Journal of Marketing 62, 2 (1998), 14–30.

[21] Yon Ade Lose Hermanto. 2023. Semantic Interpretation in Experimental Typography Creation. KnE Social Sciences 8, 15 (2023), 252–257.

[22] Kai-Wen Hsiao, Yong-Liang Yang, Yung-Chih Chiu, Min-Chun Hu, Chih-Yuan Yao, and Hung-Kuo Chu. 2023. Img2Logo: Generating Golden Ratio Logos from Images. In Computer Graphics Forum, Vol. 42. Wiley Online Library, 37–49.

[23] Shir Iluz, Yael Vinker, Amir Hertz, Daniel Berio, Daniel Cohen-Or, and Ariel Shamir. 2023. Word-As-Image for Semantic Typography. ACM Transactions on Graphics 42, 4, Article 151 (2023), 11 pages.

[24] Youwen Kang, Zhida Sun, Sitong Wang, Zeyu Huang, Ziming Wu, and Xiaojuan Ma. 2021. MetaMap: Supporting visual metaphor ideation through multi-dimensional example-based exploration. In Proceedings of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, Article 427, 15 pages.

[25] Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C. Berg, Wan-Yen Lo, Piotr Dollár, and Ross Girshick. 2023. Segment Anything. arXiv:2304.02643

[26] Janin Koch, Andrés Lucero, Lena Hegemann, and Antti Oulasvirta. 2019. May AI? Design ideation with cooperative contextual bandits. In Proceedings of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, 1–12.

[27] Yuki Koyama, Daisuke Sakamoto, and Takeo Igarashi. 2014. Crowd-powered parameter analysis for visual design exploration. In Proceedings of the 27th annual ACM symposium on User interface software and technology. 65–74.

[28] Jieun Lee, Eunju Ko, and Carol M Megehee. 2015. Social benefits of brand logos in presentation of self in cross and same gender influence contexts. Journal of Business Research 68, 6 (2015), 1341–1349.

[29] Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. 2022. Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the International Conference on Machine Learning, Vol. 162. PMLR, 12888–12900.

[30] Yi-Na Li, Kang Zhang, and Dong-Jin Li. 2017. Rule-based automatic generation of logo designs. Leonardo 50, 2 (2017), 177–181.

[31] Giorgia Lupi and Stefanie Posavec. 2018. Observe, collect, draw!: A visual journal: Discover the patterns in your everyday life. Princeton Architectural Press.

[32] O Mataev and H Mataev. 2006. Olga’s gallery. Giuseppe Arcimboldo.

[33] Mark Meyer, Alan Barr, Haeyoung Lee, and Mathieu Desbrun. 2002. Generalized barycentric coordinates on irregular polygons. Journal of Graphics Tools 1 (2002), 13–22.

[34] Chong Mou, Xintao Wang, Liangbin Xie, Jian Zhang, Zhongang Qi, Ying Shan, and Xiaohu Qie. 2023. T2i-adapter: Learning adapters to dig out more controllable ability for text-to-image diffusion models. arXiv:2302.08453

[35] Takeshi Okada and Kentaro Ishibashi. 2017. Imitation, inspiration, and creation: Cognitive process of creative drawing by copying others’ artworks. Cognitive Science 41, 7 (2017), 1804–1837.

[36] Long Ouyang, Jeffrey Wu, Xu Jiang, Diogo Almeida, Carroll Wainwright, Pamela Mishkin, Chong Zhang, Sandhini Agarwal, Katarina Slama, Alex Ray, et al. 2022. Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems 35 (2022), 27730–27744.

[37] Helen Petrie, Fraser Hamilton, and Neil King. 2004. Tension, what tension? Website accessibility and visual design. In Proceedings of the International Cross-Disciplinary Workshop on Web Accessibility, Vol. 63. Association for Computing Machinery, 13–18.

[38] Huy Quoc Phan, Hongbo Fu, and Antoni B Chan. 2015. Flexyfont: Learning transferring rules for flexible typeface synthesis. In Computer Graphics Forum, Vol. 34. Wiley Online Library, 245–256.

[39] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. 2021. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Vol. 139. PMLR, 8748–8763.

[40] Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with clip latents. arXiv:2204.06125

[41] Aditya Ramesh, Mikhail Pavlov, Gabriel Goh, Scott Gray, Chelsea Voss, Alec Radford, Mark Chen, and Ilya Sutskever. 2021. Zero-shot text-to-image generation. In Proceedings of the International Conference on Machine Learning, Vol. 139. PMLR, 8821–8831.

[42] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-Resolution Image Synthesis With Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. IEEE, 10684–10695.

[43] Subhadip Roy and Rekha Attri. 2022. Physimorphic vs. Typographic logos in destination marketing: Integrating destination familiarity and consumer characteristics. Tourism Management 92 (2022), 104544.

[44] Patsorn Sangkloy, Wittawat Jitkrittum, Diyi Yang, and James Hays. 2022. A sketch is worth a thousand words: Image retrieval with text and sketch. In Proceedings of the European Conference on Computer Vision. Springer, 251–267.

[45] Yang Shi, Pei Liu, Siji Chen, Mengdi Sun, and Nan Cao. 2022. Supporting expressive and faithful pictorial visualization design with visual style transfer. IEEE Transactions on Visualization and Computer Graphics 29, 1 (2022), 236–246.

[46] Ben Shneiderman. 2007. Creativity support tools: accelerating discovery and innovation. Commun. ACM 50, 12 (2007), 20–32.

[47] Maham Tanveer, Yizhi Wang, Ali Mahdavi-Amiri, and Hao Zhang. 2023. DS-Fusion: Artistic Typography via Discriminated and Stylized Diffusion. In Proceedings of the International Conference on Computer Vision. IEEE.

[48] Purva Tendulkar, Kalpesh Krishna, Ramprasaath R Selvaraju, and Devi Parikh. 2019. Trick or TReAT: Thematic reinforcement for artistic typography. arXiv:1903.07820

[49] Dimitri von Rütte, Elisabetta Fedele, Jonathan Thomm, and Lukas Wolf. 2023. FABRIC: Personalizing Diffusion Models with Iterative Feedback. arXiv:2307.10159

[50] Yizhi Wang, Yue Gao, and Zhouhui Lian. 2020. Attribute2font: Creating fonts you want from attributes. ACM Transactions on Graphics 39, 4, Article 69 (2020), 15 pages.

[51] Yun Wang, Zhitao Hou, Leixian Shen, Tongshuang Wu, Jiaqi Wang, He Huang, Haidong Zhang, and Dongmei Zhang. 2022. Towards natural language-based visualization authoring. IEEE Transactions on Visualization and Computer Graphics 29, 1 (2022), 1222–1232.

[52] Yizhi Wang, Guo Pu, Wenhan Luo, Yexin Wang, Pengfei Xiong, Hongwen Kang, and Zhouhui Lian. 2022. Aesthetic text logo synthesis via content-aware layout inferring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. IEEE, 2436–2445.

[53] Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed Chi, Quoc V Le, Denny Zhou, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems 35 (2022), 24824–24837.

[54] Shishi Xiao, Suizi Huang, Yue Lin, Yilin Ye, and Wei Zeng. 2023. Let the Chart Spark: Embedding Semantic Context into Chart with Text-to-Image Generative Model. arXiv:2304.14630

[55] Jie Xu and Craig S Kaplan. 2007. Calligraphic packing. In Proceedings of Graphics Interface. Association for Computing Machinery, 43–50.

[56] Xiaotong Xu, Rosaleen Xiong, Boyang Wang, David Min, and Steven P Dow. 2021. Ideaterelate: An examples gallery that helps creators explore ideas in relation to their own. Proceedings of the ACM on Human-Computer Interaction 5, CSCW2, Article 352 (2021), 18 pages.

[57] Chuan Yan, John Joon Young Chung, Yoon Kiheon, Yotam Gingold, Eytan Adar, and Sungsoo Ray Hong. 2022. FlatMagic: Improving flat colorization through AI-driven design for digital comic professionals. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. 1–17.

[58] Zihan Yan, Chunxu Yang, Qihao Liang, and Xiang ‘Anthony’ Chen. 2023. XCreation: A Graph-based Crossmodal Generative Creativity Support Tool. In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology. 1–15.

[59] Shuai Yang, Jiaying Liu, Zhouhui Lian, and Zongming Guo. 2017. Awesome typography: Statistics-based text effects transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. IEEE, 7464–7473.

[60] Enhao Zhang and Nikola Banovic. 2021. Method for exploring generative adversarial networks (gans) via automatically generated image galleries. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. 1–15.

[61] Junsong Zhang, Yu Wang, Weiyi Xiao, and Zhenshan Luo. 2017. Synthesizing ornamental typefaces. In Computer Graphics Forum, Vol. 36. Wiley Online Library, 64–75.

[62] Jiayi Eris Zhang, Nicole Sultanum, Anastasia Bezerianos, and Fanny Chevalier. 2020. DataQuilt: Extracting visual elements from images to craft pictorial visualizations. In Proceedings of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, 1–13.

[63] Lvmin Zhang and Maneesh Agrawala. 2023. Adding conditional control to text-to-image diffusion models. arXiv:2302.05543

[64] Nanxuan Zhao, Nam Wook Kim, Laura Mariah Herman, Hanspeter Pfister, Rynson WH Lau, Jose Echevarria, and Zoya Bylinskii. 2020. Iconate: Automatic compound icon generation and ideation. In Proceedings of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, 1–13.

[65] Tongyu Zhou, Connie Liu, Joshua Kong Yang, and Jeff Huang. 2023. filtered.ink: Creating Dynamic Illustrations with SVG Filters. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems. 1–15.

[66] Changqing Zou, Junjie Cao, Warunika Ranaweera, Ibraheem Alhashim, Ping Tan, Alla Sheffer, and Hao Zhang. 2016. Legible compact calligrams. ACM Transactions on Graphics 35, 4, Article 122 (2016), 12 pages.

Authors:

(1) SHISHI XIAO, The Hong Kong University of Science and Technology (Guangzhou), China;

(2) LIANGWEI WANG, The Hong Kong University of Science and Technology (Guangzhou), China;

(3) XIAOJUAN MA, The Hong Kong University of Science and Technology, China;

(4) WEI ZENG, The Hong Kong University of Science and Technology (Guangzhou), China.

This paper is available on arXiv under the Attribution-NonCommercial-ShareAlike 4.0 International license.