What Do Developers Ask ChatGPT the Most?

Table of Links

Abstract

1 Introduction

2 Data Collection

3 RQ1: What types of software engineering inquiries do developers present to ChatGPT in the initial prompt?

4 RQ2: How do developers present their inquiries to ChatGPT in multi-turn conversations?

5 RQ3: What are the characteristics of the sharing behavior?

6 Discussions

7 Threats to Validity

8 Related Work

9 Conclusion and Future Work

References


3 RQ1: What types of software engineering inquiries do developers present to ChatGPT in the initial prompt?

Motivation: The objective of RQ1 is to characterize the types of software engineering-related inquiries that developers present in the ChatGPT conversations they share in GitHub issues and pull requests, focusing on the initial prompt of each conversation. Understanding these inquiries is important for several reasons.


Firstly, a taxonomy provides a structured framework for categorizing and understanding the diverse range of software engineering inquiries that developers put to ChatGPT.

Secondly, analyzing how frequently these inquiries occur can uncover common needs among developers who use ChatGPT in collaborative software development. These insights can inform future improvements to FM-powered tools, including ChatGPT, and the construction of benchmarks for evaluating the effectiveness of such tools in collaborative software development.


Finally, comparing inquiries across pull requests and issues lets us examine the different contexts in which these inquiries arise.

We focus on the initial prompt of each conversation in RQ1 for two reasons: 1) Most conversations (66.8% of DevGPT-PRs and 63.1% of DevGPT-Issues) are single-turn interactions, in which the initial prompt contains the only task presented to ChatGPT. 2) When examining multi-turn conversations, we observed that subsequent prompts never introduce new types of software engineering inquiries beyond those presented in the initial prompts.


3.1 Approach

We used open coding to manually categorize the initial prompts of all conversations in DevGPT-PRs and DevGPT-Issues. During labeling, we also removed non-English prompts that data preprocessing had missed: 2 prompts from DevGPT-PRs and 5 from DevGPT-Issues. Our taxonomy is therefore based on 210 prompts from DevGPT-PRs and 370 prompts from DevGPT-Issues. We conducted the labeling in three rounds:


– In the first round, five co-authors independently categorized 50 randomly selected prompts from each dataset (DevGPT-PRs and DevGPT-Issues), i.e., 100 prompts in total. After a thorough discussion, the team formulated a coding book with 16 labels, excluding Others.

– In the second round, two co-authors independently annotated another 100 prompts, 50 each from DevGPT-PRs and DevGPT-Issues, using the coding book established in the first round. The two annotators achieved an inter-rater agreement of 0.77, as measured by Cohen’s kappa coefficient, which represents substantial agreement (Landis and Koch, 1977). They then discussed and resolved their disagreements and further refined the coding book.

– In the final round, the same two annotators independently labeled the remaining prompts based on the coding book.
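
Cohen’s kappa, the agreement measure used in the second round, corrects raw agreement for the agreement two annotators would reach by chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of items labeled identically and p_e is the chance agreement expected from each annotator’s label frequencies. The minimal Python sketch below computes it for two label lists. The function name `cohens_kappa` and the six example labels are hypothetical illustrations, not the study’s tooling or data; established libraries such as scikit-learn’s `cohen_kappa_score` provide the same measure.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Return Cohen's kappa for two annotators who labeled the same items (hypothetical helper)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty list of items")
    n = len(labels_a)

    # Observed agreement p_o: share of items that both annotators labeled identically.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement p_e: for each label, multiply the two annotators'
    # marginal proportions, then sum over labels (absent labels count as 0).
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if p_e == 1.0:
        # Both annotators used one identical label for every item, so kappa
        # is 0/0 (undefined); this sketch returns 1.0 by convention.
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical labels for six prompts (not data from the study).
annotator_1 = ["Code Generation", "How-to", "Conceptual", "How-to", "Review", "Code Generation"]
annotator_2 = ["Code Generation", "How-to", "How-to", "How-to", "Review", "Conceptual"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.3f}")  # prints "kappa = 0.538"
```

In this hypothetical example, the annotators agree on 4 of 6 prompts (p_o ≈ 0.667), but chance alone would yield p_e = 10/36 ≈ 0.278, so κ = 14/26 ≈ 0.54. On the Landis and Koch (1977) scale, 0.41 to 0.60 is moderate and 0.61 to 0.80 is substantial agreement, the band in which the study’s 0.77 falls.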


3.2 Results

Table 1 presents the taxonomy derived from the 580 initial prompts in shared conversations across the two datasets. Most initial prompts present specific software engineering (SE) inquiries: 198 of the 210 initial prompts in DevGPT-PRs and 329 of the 370 in DevGPT-Issues. The remaining prompts, labeled as Others (12 in DevGPT-PRs and 41 in DevGPT-Issues), contain either ambiguous content or information not directly related to SE inquiries, for example, “Explain Advancing Research Communication – the role of Humanities in the Digital Era”.

In both datasets, the five most prevalent categories of SE-related inquiries were Code Generation, Conceptual, How-to, Issue Resolving, and Review, which together account for 72% of SE-related prompts in DevGPT-PRs and 81% in DevGPT-Issues. A notable distinction between the two sources is that DevGPT-PRs contain a higher share of prompts seeking help with Review, Comprehension, Human language translation, and Documentation, whereas DevGPT-Issues contain a higher share of requests related to Data generation, Data formatting, and Mathematical problem solving. Below, we describe each SE-related category in more detail.


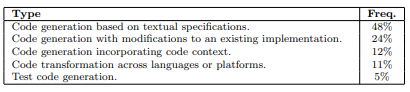

==(C1) Code Generation:== This is the most frequent category in both sources, accounting for 20% of prompts in DevGPT-PRs and 27% in DevGPT-Issues. In prompts in this category, developers ask ChatGPT to generate code snippets from a provided description. A follow-up analysis of these requests revealed five distinct types of requirements for code generation. As shown in Table 2, the largest share of requests (48%) specify the developer’s needs in textual form. However, developers also commonly supply existing code, either as context for generation or as a basis for adaptation. The requirements range from general code generation to more specific tasks, such as transforming code across programming languages or platforms and generating test code.


==(C2) Conceptual:== In 18% of the initial prompts in DevGPT-PRs and 14% in DevGPT-Issues, developers turn to ChatGPT for knowledge and clarification about theoretical concepts, principles, practices, tools, or high-level implementation ideas. These questions range from technical specifics, such as the memory usage limits of WebAssembly (WASM) in Chrome, to practical implementation questions, such as whether a Redis-like cache with time-to-live (TTL) functionality could be built on SQLite. These examples show that ChatGPT serves not only as a direct coding assistant but also as a conceptual guide that helps developers understand and apply complex technical knowledge with which they are unfamiliar.


==(C3) How-to:== We observe that 13% of initial prompts in DevGPT-PRs, and a higher 22% in DevGPT-Issues, come from developers seeking step-by-step instructions to accomplish specific SE-related goals, for instance, “I am doing… How can I achieve this?” and “How to make an iOS framework M1 compatible?”. Such examples illustrate ChatGPT’s role in offering insights and starting points for tackling SE challenges. The higher share of How-to prompts in DevGPT-Issues than in DevGPT-PRs suggests that developers may turn to ChatGPT more often in the early stages of problem-solving, when they may not yet have a concrete solution.


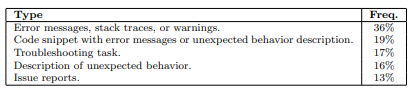

==(C4) Issue resolving:== In 12% of the initial prompts in DevGPT-PRs and 14% in DevGPT-Issues, developers seek assistance in resolving SE-related issues. Further examination of these prompts identified five types of information that developers provide when seeking help with issues, as detailed in Table 3. The distribution of these types shows that developers use diverse strategies to present their problems in the prompt. The most common strategy (36%) is sharing error messages or traces, which suggests that developers prefer direct feedback on specific errors.


Combining code snippets with a detailed description of the error or unexpected behavior (19%) is more common than describing the issue in words alone (16%). Another 17% of these prompts seek help with troubleshooting tasks unrelated to code development, such as installing a library or setting up a system. Interestingly, developers occasionally (13%) include external links in their initial prompts, expecting ChatGPT to access these links, review the associated issues, and suggest resolutions.


==(C5) Review:== In DevGPT-PRs, 9% of the initial prompts ask ChatGPT to suggest code improvements or to compare different implementations or design choices. This is notably higher than in DevGPT-Issues, where 4% of the initial prompts make similar requests. This distribution is expected: in the context of PRs, developers tend to prioritize code quality and seek external validation or suggestions for improvement, whereas in issues the primary focus is on identifying, discussing, and exploring potential solutions to existing problems.


==(C6) Comprehension:== In DevGPT-PRs, 7% of the initial prompts seek ChatGPT’s assistance in understanding the behavior of code snippets or software artifacts, exceeding the 3% observed in DevGPT-Issues. These prompts typically begin with phrases like “Explain this code”. The higher prevalence in PRs suggests a focused interest in clarifying code behavior as part of the review and integration process.


==(C7) Human language translation:== Although human language translation is not specific to SE, developers use ChatGPT for it in 6% of the conversations in DevGPT-PRs, but in none in DevGPT-Issues. This category covers requests to translate text relevant to software development from one language to another, such as UI components, code strings, technical documentation, and project descriptions.


==(C8) Documentation:== In DevGPT-PRs and DevGPT-Issues, 5% and 2% of the initial prompts, respectively, ask ChatGPT for help with creating, reviewing, enhancing, or refining technical documentation. This category spans a wide range of documentation forms, including project descriptions, issue reports, pull request content, technical communications, and markdown files. These requests indicate that developers recognize ChatGPT’s ability to improve the clarity, accuracy, and effectiveness of technical documentation, reinforcing the importance of well-crafted documentation in software development.


==(C9) Information giving:== In DevGPT-PRs and DevGPT-Issues, 4% and 2% of initial prompts, respectively, do not directly request assistance with a specific inquiry. Instead, they provide contextual information relevant to an SE-related inquiry presented in subsequent prompts. This context can be shared code snippets, an explanation of the developer’s current project, or the role ChatGPT is intended to play. This approach, found in multi-turn conversations, reveals a distinct prompting strategy: developers provide background information as a separate initial prompt before presenting the inquiry.


==(C10) Data generation:== In 2% and 4% of the initial prompts in DevGPT-PRs and DevGPT-Issues, respectively, developers ask ChatGPT to create various types of data, including test inputs, search queries, icons, example API specifications, and website names.


==(C11) Data formatting:== Besides generating data, in 1% and 3% of initial prompts in DevGPT-PRs and DevGPT-Issues, respectively, developers ask ChatGPT to transform given data into a different format. For instance, “You are a service that translates user requests into JSON objects of type ‘Plan’ according to the following TypeScript definitions…”.


==(C12) Mathematical problem solving:== Developers turn to ChatGPT for help with mathematical problems in 1% of the initial prompts in DevGPT-PRs and 3% in DevGPT-Issues. These inquiries often aim to deepen the developer’s understanding of algorithms that underpin programming tasks or to create test cases for software validation.


==(C13) Verifying capability (of ChatGPT):== We found two initial prompts in DevGPT-PRs and one in DevGPT-Issues in which developers check ChatGPT’s capabilities; for example, one developer asks “are you familiar with typedb?”. In this case, the developer assessed ChatGPT’s capabilities to determine the scope of assistance it could provide for their SE-related inquiries, a step toward establishing trust in the reliability of FM-powered tools for software development.


==(C14) Prompt engineering:== We found one initial prompt in DevGPT-PRs in which the developer presents a prompt for an SE-related inquiry and asks ChatGPT to provide a better one. This instance illustrates a distinctive prompt engineering strategy: using ChatGPT to refine the prompt itself.


==(C15) Execution:== Four initial prompts, all in DevGPT-Issues, ask ChatGPT to execute a specific task, such as running an experiment or executing code. Examples include “Try running that against this function and show me the result” and “Benchmark that for me and plot a chart”. These prompts indicate that developers believe ChatGPT can carry out practical execution tasks, an expectation that is rarely examined in the literature.


==(C16) Data analysis:== Besides execution inquiries, developers also ask ChatGPT to perform data analysis tasks on a provided CSV file. We found two such cases in DevGPT-Issues, for instance, “find all the entries that are present in the left and in the right column.” Such queries may be used to plan data preprocessing or data science projects.

:::info

Authors

- Huizi Hao

- Kazi Amit Hasan

- Hong Qin

- Marcos Macedo

- Yuan Tian

- Steven H. H. Ding

- Ahmed E. Hassan

:::

:::info

This paper is available on arxiv under CC BY-NC-SA 4.0 license.

:::
