Chalkboards & Code—Remixing the Digital Classroom

Authors:

(1) Florian Gnadlinger, Faculty of Computer Science, Communication, and Economics, University of Applied Sciences Berlin, Germany;

(2) Simone Kriglstein, Faculty of Informatics, Masaryk University, Czech Republic.

Table of Links

Abstract and 1 Introduction

2 Background

3 Method & Results

4 Discussion

5 Conclusion and References

Abstract: This contribution draws attention to the implications of meta-architectural design decisions for intelligent tutoring systems in the context of formative assessments. As a first result of addressing this issue, it presents a meta-architectural system design that includes the role of educators.

1 INTRODUCTION

Based on an ongoing systematic literature review and on similar, previously conducted reviews [1–3], we see evidence that, from a conceptual perspective, intelligent tutoring systems are developed as black boxes for the actual participants in educational assessment scenarios.

This underlines the need to examine system designs, the algorithms they employ, and the conceptual implementation of pedagogical-psychological models in intelligent tutoring systems in order to enhance explainability and transparency for all stakeholders. A first step is to reflect on intelligent tutoring systems beyond their current boundaries and to ask:

RQ1: How can we incorporate the role of educators into meta-architectural designs of intelligent tutoring systems to foster their explainability and transparency?

2 BACKGROUND

Competency-based learning (also called competency-based education, among other synonyms discussed in [4]) refers to a pedagogical approach that supports the development of the practical skills and behaviors necessary for success in real-world situations [5, 6]. A revised definition of competency-based learning divides this concept into seven major aspects [6, 7]. Two of these aspects address the assessment of competencies: “…(2) assessment is meaningful, timely, relevant, and actionable evidence; … (4) students’ assessment is based on evidence of mastery;” [6, p. 1904] (retrieved from Levine & Patrick [7]).

Formative assessments are a major form of assessing competencies [8–11]. They are typically incremental [9, 10, 12] and inform not only learners about their current state but also educators about the effectiveness of their pedagogical and didactical methods [11]. Various methodologies have been developed to design, develop, and implement formative assessments and to derive this information for educators, for example, the ADDIE model [13], Four-Component Instructional Design (4C/ID) [14], or the Evidence-Centered Design (ECD) framework [15].

ECD is based on the idea that learners who fulfill tasks create some kind of result (a work product) that reflects, to some degree, their competence level [16]. Hence, extracting evidence from work products within a defined evidence identification process leads to claims about certain competencies [15, 17, 18]. Collecting and storing these claims, i.e., beliefs about the learner, results in an individual description of each learner: a learner model. Thus, before the actual assessment is constructed, all of these parts, as well as further aspects, have to be reflected upon [15].
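The chain described above (work product, evidence identification, claims, learner model) can be illustrated with a minimal Python sketch. It rests on simplifying assumptions: the class names `WorkProduct`, `EvidenceRule`, and `LearnerModel` are hypothetical, evidence identification is reduced to an exact-match check, and a claim is simply the share of successful observations. ECD itself allows much richer scoring rules and statistical (e.g., Bayesian) measurement models.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class WorkProduct:
    """Result a learner produces while fulfilling a task (hypothetical structure)."""
    learner_id: str
    task_id: str
    answer: str


@dataclass
class EvidenceRule:
    """Hypothetical evidence rule linking one task to one competency."""
    task_id: str
    competency: str
    expected_answer: str

    def identify(self, work_product: WorkProduct) -> int:
        # Evidence identification: reduce the work product to an observable (1 = correct, 0 = incorrect).
        given = work_product.answer.strip().lower()
        return int(given == self.expected_answer.strip().lower())


@dataclass
class LearnerModel:
    """Collects observables per competency for one learner."""
    learner_id: str
    observations: dict[str, list[int]] = field(default_factory=dict)

    def update(self, competency: str, observable: int) -> None:
        # Store the new piece of evidence under the competency it informs.
        self.observations.setdefault(competency, []).append(observable)

    def claim(self, competency: str) -> Optional[float]:
        # Simplistic claim (assumption): share of successful observations, or None without evidence.
        obs = self.observations.get(competency)
        if not obs:
            return None
        return sum(obs) / len(obs)


# Usage: two work products from one learner, both informing the competency "fractions".
rules = {
    "t1": EvidenceRule("t1", "fractions", "3/4"),
    "t2": EvidenceRule("t2", "fractions", "1/2"),
}
model = LearnerModel("alice")
for wp in [WorkProduct("alice", "t1", "3/4"), WorkProduct("alice", "t2", "2/3")]:
    rule = rules[wp.task_id]
    model.update(rule.competency, rule.identify(wp))

print(model.claim("fractions"))  # 0.5
```

Separating the evidence rule from the learner model mirrors the ECD idea that the evidence identification process is defined before, and independently of, any individual learner.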

Educators usually proceed in a similar (but far less formal) way when developing and applying formative assessments in their educational settings, which costs them a lot of time. A recent McKinsey study [19] focusing on K-12 teachers in the US, the UK, Canada, and Singapore reports that they spend 34% of their time on preparation, evaluation, and feedback tasks [19, 20].

Hence, researchers and developers have been eager to tailor computer-based learning experiences to the learner's needs by using evidence about competencies to adapt the learning process autonomously [21–23]. To achieve this, such systems incorporate learner models, task (or, more generally, domain) models, and automated evidence identification processes, and use them to generate adaptation recommendations. Examples of conceptual formulations for such systems include recommending the next best-fitting learning object (e.g., a task with a specific difficulty), finding a sequence of novel courses, or supporting the search for learning peers [24, 25].
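The first of these formulations, recommending the next best-fitting task, can be sketched as follows. The sketch assumes a Rasch (one-parameter logistic) model, which is one common choice but not necessarily the one used by the cited systems: the learner model is reduced to a single ability estimate, the task model to one difficulty value per task, and the hypothetical function `recommend_next_task` picks the open task whose predicted success probability is closest to a target value (0.7 here, an arbitrary illustrative choice).

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    task_id: str
    difficulty: float  # assumed to be on the same logit scale as the ability estimate


def success_probability(ability: float, difficulty: float) -> float:
    """Rasch (1PL) model: probability that a learner with `ability` solves a task of `difficulty`."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def recommend_next_task(
    ability: float, tasks: list[Task], completed: set[str], target: float = 0.7
) -> Optional[Task]:
    """Return the open task whose predicted success probability is closest to `target`."""
    candidates = [t for t in tasks if t.task_id not in completed]
    if not candidates:
        return None
    return min(candidates, key=lambda t: abs(success_probability(ability, t.difficulty) - target))


tasks = [Task("easy", -1.0), Task("medium", 0.0), Task("hard", 1.5)]
print(recommend_next_task(0.8, tasks, completed=set()).task_id)       # medium
print(recommend_next_task(0.8, tasks, completed={"medium"}).task_id)  # easy
```

Targeting a success probability below 1 keeps tasks challenging without being frustrating; in a full system, the ability estimate would itself be updated from the evidence produced by each completed task.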

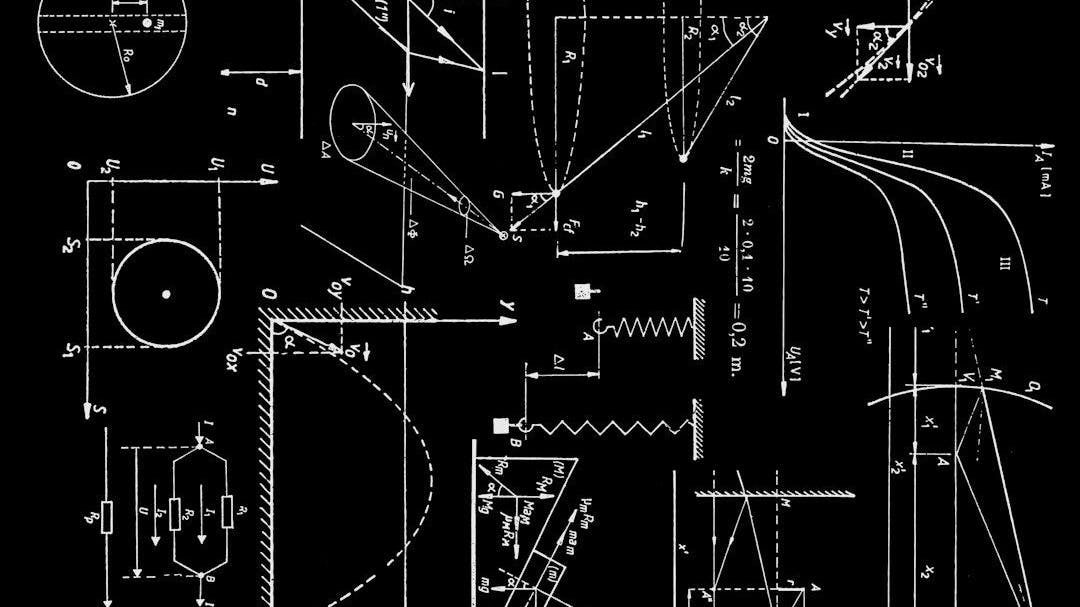

Today, advanced systems are able to mimic the behavior of human tutors by using artificial intelligence, which is why they are classified as intelligent tutoring systems [26, 27]. To do so, these systems incorporate not only learner models and domain models but also so-called tutor models. The tutor model is the decision entity that, in response to the learner's interactions, schedules pedagogical or didactical interventions according to the state of the learner model [28]. Following this perspective, intelligent tutoring systems are designed and developed according to the meta-architectural approach illustrated by the gray elements in Figure 1 (see [26, 28–30]).
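The division of labor between domain, learner, and tutor models described above can be illustrated with a deliberately simple interaction loop. All class names, the error-count learner model, the intervention labels, and the thresholds `hint_after` and `worked_example_after` are hypothetical assumptions chosen for illustration. The sketch only mirrors the three-model split, not any specific system or the design shown in Figure 1.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DomainModel:
    """Hypothetical domain model: correct answer and hint per task."""
    answers: dict[str, str]
    hints: dict[str, str]


@dataclass
class LearnerModel:
    """Hypothetical learner model: consecutive errors per task."""
    errors: dict[str, int] = field(default_factory=dict)

    def record(self, task_id: str, correct: bool) -> None:
        # A correct answer resets the error count; a wrong one increments it.
        self.errors[task_id] = 0 if correct else self.errors.get(task_id, 0) + 1


@dataclass
class TutorModel:
    """Decision entity: chooses an intervention based on the learner model state."""
    hint_after: int = 2            # assumed threshold for offering a hint
    worked_example_after: int = 3  # assumed threshold for showing a worked example

    def decide(self, task_id: str, learner: LearnerModel, domain: DomainModel) -> str:
        n = learner.errors.get(task_id, 0)
        if n == 0:
            return "praise"
        if n >= self.worked_example_after:
            return f"worked example: {domain.answers[task_id]}"
        if n >= self.hint_after:
            return f"hint: {domain.hints[task_id]}"
        return "try again"


def handle_interaction(task_id: str, answer: str, domain: DomainModel,
                       learner: LearnerModel, tutor: TutorModel) -> str:
    """One pass of the loop: evaluate the answer, update the learner model, let the tutor decide."""
    correct = answer.strip() == domain.answers[task_id]
    learner.record(task_id, correct)
    return tutor.decide(task_id, learner, domain)


domain = DomainModel(answers={"t1": "42"}, hints={"t1": "Multiply 6 by 7."})
learner = LearnerModel()
tutor = TutorModel()
responses = [handle_interaction("t1", a, domain, learner, tutor) for a in ["41", "40", "13", "42"]]
print(responses)
# ['try again', 'hint: Multiply 6 by 7.', 'worked example: 42', 'praise']
```

Keeping the decision logic in its own `TutorModel` makes the intervention policy an explicit, inspectable component, which is the kind of element whose opacity the introduction identifies as a concern.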