ML Tool Spots 80% of Vulnerability-Inducing Commits Ahead of Time

Table Of Links

ABSTRACT

I. INTRODUCTION

II. BACKGROUND

III. DESIGN

- DEFINITIONS

- DESIGN GOALS

- FRAMEWORK

- EXTENSIONS

IV. MODELING

- CLASSIFIERS

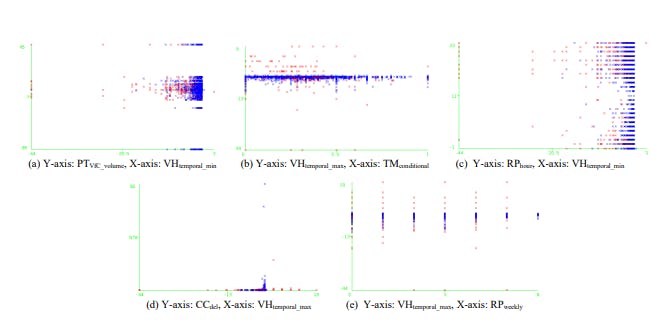

- FEATURES

V. DATA COLLECTION

VI. CHARACTERIZATION

- VULNERABILITY FIXING LATENCY

- ANALYSIS OF VULNERABILITY FIXING CHANGES

- ANALYSIS OF VULNERABILITY-INDUCING CHANGES

VII. RESULT

- N-FOLD VALIDATION

- EVALUATION USING ONLINE DEPLOYMENT MODE

VIII. DISCUSSION

- IMPLICATIONS ON MULTI-PROJECTS

- IMPLICATIONS ON ANDROID SECURITY WORKS

- THREATS TO VALIDITY

- ALTERNATIVE APPROACHES

IX. RELATED WORK

CONCLUSION AND REFERENCES

\

\

X. CONCLUSION

This paper presented a practical, preemptive security testing approach based on accurate, online prediction of likely-vulnerable code changes at pre-submit time. We presented three new types of feature data that are effective for vulnerability prediction and evaluated their recall and precision via N-fold validation using data from the large and important Android open source project.
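As a rough illustration of the N-fold validation mentioned above, the following minimal sketch splits sample indices into N train/test folds. The function name `k_fold_indices` and the contiguous, unshuffled split are assumptions made for illustration; this is not the paper's actual evaluation tooling.

```python
from typing import Iterator, List, Tuple


def k_fold_indices(n_samples: int, n_folds: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of n_folds folds.

    Hypothetical helper: contiguous folds, no shuffling or stratification.
    """
    if not 2 <= n_folds <= n_samples:
        raise ValueError("n_folds must be between 2 and n_samples")
    base, extra = divmod(n_samples, n_folds)
    start = 0
    for fold in range(n_folds):
        # The first `extra` folds take one additional sample each.
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


# Each sample lands in exactly one test fold; a classifier would be trained
# on `train` and scored on `test` once per fold.
for train, test in k_fold_indices(n_samples=10, n_folds=3):
    print(len(train), len(test))
```

Each fold serves as the held-out test set exactly once, so recall and precision can be aggregated over all samples. A real evaluation would typically shuffle or stratify the samples first.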

\

We also evaluated the online deployment mode and identified the subset of feature data types that are not specific to the target project from which the training data is collected and can therefore be used for other projects (e.g., in a multi-project setting). The evaluation results showed that our VP framework identifies ~80% of the evaluated vulnerability-inducing changes at pre-submit time with 98% precision and a <1.7% false positive ratio.
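To make the reported metrics concrete, the sketch below computes recall, precision, and false positive ratio from a confusion matrix. It assumes the conventional definitions (the paper's exact definitions may differ), and the counts are hypothetical values chosen only to fall in a similar range; they are not the paper's data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary classifier counts; 'positive' means vulnerability-inducing change."""
    tp: int  # vulnerable changes flagged as vulnerable
    fp: int  # benign changes flagged as vulnerable
    tn: int  # benign changes not flagged
    fn: int  # vulnerable changes missed


def recall(c: ConfusionCounts) -> float:
    """Share of vulnerable changes that were flagged."""
    return c.tp / (c.tp + c.fn)


def precision(c: ConfusionCounts) -> float:
    """Share of flagged changes that were actually vulnerable."""
    return c.tp / (c.tp + c.fp)


def false_positive_ratio(c: ConfusionCounts) -> float:
    """Share of benign changes that were wrongly flagged."""
    return c.fp / (c.fp + c.tn)


# Hypothetical counts for illustration only (not taken from the paper).
counts = ConfusionCounts(tp=80, fp=2, tn=198, fn=20)
print(f"recall={recall(counts):.3f}")
print(f"precision={precision(counts):.3f}")
print(f"false_positive_ratio={false_positive_ratio(counts):.3f}")
```

Recall describes how many vulnerability-inducing changes are caught, while precision and false positive ratio describe how much reviewer attention is spent on changes that turn out to be benign.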

\

These positive results call for future research (e.g., using advanced ML and GenAI techniques) that leverages the VP approach or framework for upstream open source projects that are managed by communities and, at the same time, are critical to the numerous software and computer products used by several billion users on a daily basis.

\

The urgency of this paper stems from its potential societal benefits. Widespread adoption of ML-based approaches like the VP framework could greatly enhance our ability to share credibility data about open source contributors and projects. Such shared data would empower open source communities to combat threats like fake accounts (as seen in the Linux XZ Utils backdoor attack).

\

Additionally, this ML-based approach can facilitate rapid response across open source projects when long-planned attacks emerge. Sharing information across similar or downstream projects enhances preparedness and reduces response time to similar attacks.

\

Therefore, we call for an open source community initiative to establish a practice of sharing a credibility database of developers and projects in order to harden the open source software supply chains that numerous computer and software products depend on.

\

:::info

Author:

:::

:::info

This paper is available on arXiv under the CC BY 4.0 Deed (Attribution 4.0 International) license.

:::

\