Build an AI Agent That Out-Researches Your Competitors

Building Your Own Perplexity: The Architecture Behind AI-Powered Deep Research

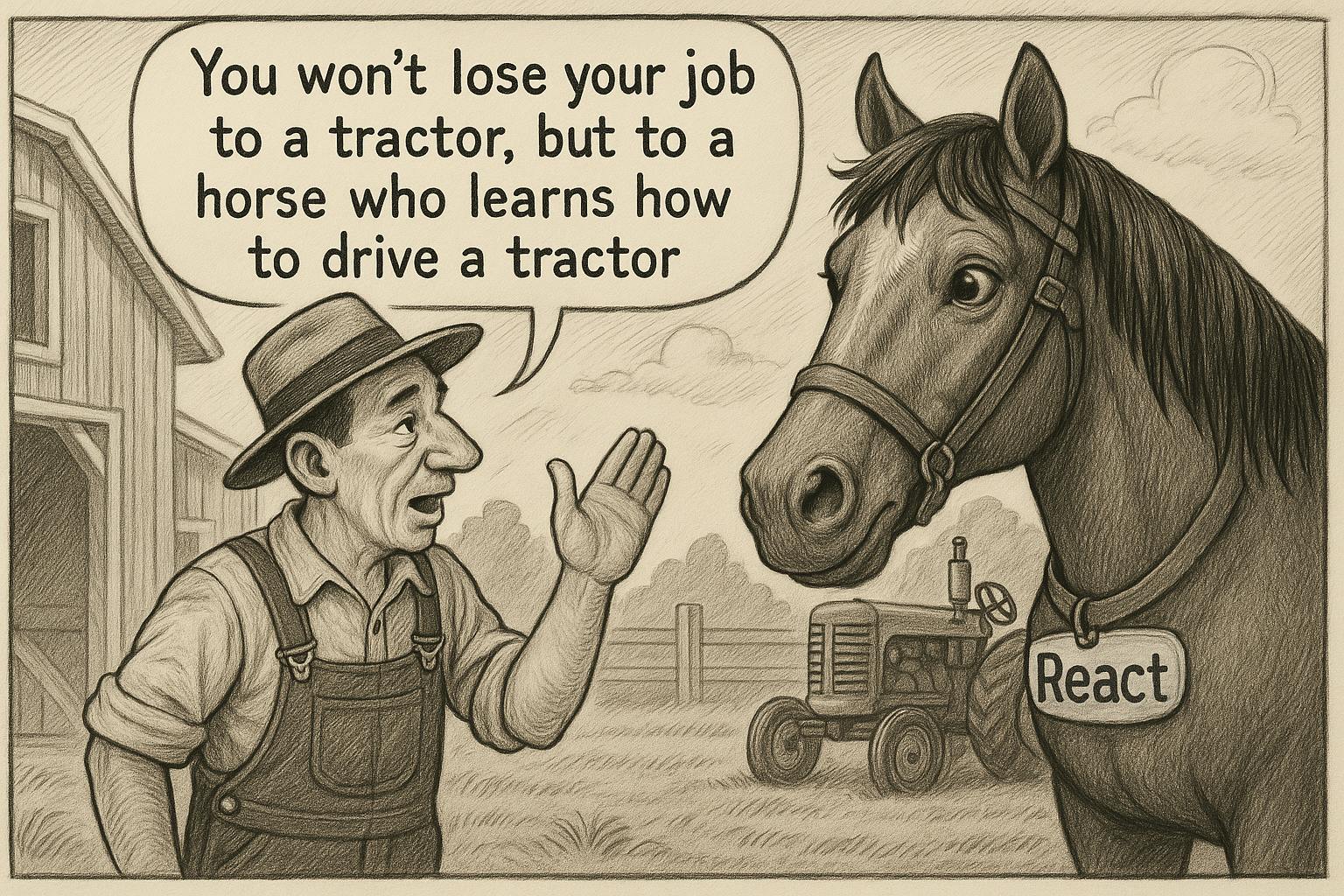

⚠️ A Wake-Up Call for Developers

Many programmers will lose their jobs to AI in the coming years, but not those who learn to build it. Your mission isn’t just to learn how to use ChatGPT or Claude — it’s to become the creator of such systems, to build the next Perplexity rather than just use it.

Open Source: https://github.com/aifa-agi/aifa-deep-researcer-starter/

1. What You’ll Master by Reading This Article

This article provides a complete architectural blueprint for building your own deep research AI agent, similar to Perplexity’s “Deep Research” feature. You’ll learn:

Technical Architecture: How to design a recursive search system using Next.js 15, OpenAI, and exa.ai that actually works in production

Mental Models: Why deep search is a tree structure, not a linear process — and how this changes everything about AI research

Practical Solutions: How to integrate external web search with internal vector knowledge bases to create truly unique content that your competitors can’t replicate

Performance Optimization: How to manage server resources and user experience during long-running AI operations without breaking the bank

Production-Ready Code: Concrete TypeScript implementations using modern tech stack that you can deploy today

By the end of this article, you’ll have a clear understanding of how to build a self-hosted SaaS for deep research that can be integrated into any product — giving you a competitive edge that’s genuinely hard to replicate.

1.1. The Technology Stack That Powers Intelligence

For implementing our deep research AI agent, we use a modern tech stack optimized for production-ready applications with intensive AI usage. This isn’t just a tech demo — it’s built for real-world scale and reliability.

1.1.1. Frontend and Framework

React 19 — The latest version with improved performance and new concurrent rendering capabilities that handle complex AI interactions smoothly

Next.js 15 — Full-featured React framework with App Router, including support for parallel and intercepting routes (perfect for complex AI workflows)

TypeScript 5 — Strict typing for code reliability and superior developer experience when building complex AI systems

1.1.2. AI and Integrations

OpenAI SDK (v4.96.2) — Official SDK for integration with GPT-4 and other OpenAI models, with full streaming support

AI SDK (v4.1.45) — Universal library for working with various AI providers, giving you flexibility to switch models

Exa.js (v1.4.10) — Specialized AI-oriented search engine for semantic search that actually understands context

1.1.3. UI and Styling

Tailwind CSS 4 — Utility-first CSS framework for rapid development without sacrificing design quality

Radix UI — Headless components for creating accessible interfaces that work across all devices

Lucide React — Modern icon library with consistent design language

shadcn/ui — Component system built on Radix UI and Tailwind CSS for professional-grade interfaces

1.1.4. Forms and Validation

React Hook Form — High-performance library for form handling that doesn’t slow down your AI interfaces

Zod — TypeScript-first validation schema with static typing that catches errors before they reach production

Hookform Resolvers — Seamless integration between Zod and React Hook Form

1.1.5. Content Processing

React Markdown — Markdown content rendering with component support for rich AI-generated reports

date-fns — Modern library for date handling in AI research timelines

Why This Stack Matters

This technology stack provides the high performance, type safety, and scalability necessary for creating complex production-level AI applications. Every choice here is intentional — from Next.js 15’s parallel routes handling complex AI workflows, to Exa.js providing the semantic search capabilities that make deep research possible.

The result? A system that can handle the computational complexity of recursive AI research while maintaining the user experience standards that modern applications demand.

Ready to see how these pieces fit together to create something truly powerful? Let’s dive into the architecture that makes it all work.

The AI revolution isn’t coming—it’s here. And it’s creating a stark divide in the developer community. On one side are those who see AI as just another tool to boost productivity, using ChatGPT to write functions and debug code. On the other side are developers who understand a fundamental truth: the real opportunity isn’t in using AI—it’s in building it.

While most developers are learning to prompt ChatGPT more effectively, a smaller group is mastering the architecture behind systems like Perplexity, Claude, and custom AI agents. This isn’t just about staying relevant; it’s about positioning yourself on the right side of the most significant technological shift since the internet.

The harsh reality: Companies don’t need developers who can use AI tools—they need developers who can build AI systems. The difference between these two skills will determine who thrives and who becomes obsolete in the next economic cycle.

This article provides a complete architectural blueprint for building your own AI-powered deep research agent, similar to Perplexity’s “Deep Research” feature. You’ll learn not just the technical implementation, but the mental models and design principles that separate amateur AI integrations from production-ready systems that can become competitive advantages.

What you’ll master by the end:

- Recursive search architecture: How to design systems that think in trees, not lines

- AI-first data pipelines: Integrating external web search with internal knowledge bases

- Agent orchestration: Building AI systems that can evaluate, iterate, and improve their own outputs

- Production considerations: Managing server resources, timeouts, and user experience for long-running AI operations

The goal isn’t to give you code to copy-paste. It’s to transfer the architectural thinking that will let you design AI systems for any domain, any use case, and any scale. By the end, you’ll have the mental framework to build AI agents that don’t just answer questions—they conduct research like expert analysts.

Ready to move from AI consumer to AI architect? Let’s start with what changed after ChatGPT, and where today’s built-in web search falls short.

2. Introduction: Life After ChatGPT Changed Everything

We’re living through one of the most transformative periods in tech history. ChatGPT and other Large Language Models (LLMs) have fundamentally revolutionized how we interact with information. But if you’ve been building serious applications with these tools, you’ve probably hit the same wall I did: models only know the world up to their training cutoff date, and they hallucinate with alarming confidence.

2.1. The Problem: Band-Aid Web Search in Modern LLMs

The teams behind ChatGPT, Claude, and other models tried to solve this with built-in web search. It sounds great in theory, but dig deeper and you’ll find some serious architectural flaws that make it unsuitable for production applications:

Surface-Level Search: The system makes one or two search queries, grabs the first few results, and calls it a day. This isn’t research — it’s glorified Google with a chat interface.

Zero Follow-Through: There’s no recursive deepening into topics. If the first search doesn’t yield comprehensive results, the system doesn’t ask follow-up questions or explore alternative angles.

Garbage Data Quality: Traditional search engines return HTML pages cluttered with ads, navigation elements, and irrelevant content. The LLM has to “dig out” useful information from this digital junk.

Context Isolation: The system can’t connect found information with your internal data, company documents, or domain-specific knowledge bases.

2.2. The Gold Standard: Perplexity’s Deep Research Revolution

Perplexity was one of the first companies to show us how search-LLM integration should actually work. Their approach is fundamentally different from these band-aid solutions:

AI-Optimized Search Engines: Instead of generic Google API calls, they use specialized search systems that return clean, structured content designed for AI consumption.

Iterative Investigation Process: The system doesn’t stop at initial results. It analyzes findings, formulates new questions, and continues searching until it builds a comprehensive picture.

Deep Research Mode: This is an autonomous AI agent that can work for minutes at a time, recursively drilling down into topics and gathering information from dozens of sources.

This is exactly the kind of system we’re going to build together.

2.3. Why This Matters for Every Developer

In the AI-first era, every product is racing to become “smarter.” But simply plugging in the ChatGPT API is just table stakes now. Real competitive advantage comes when your AI can:

- Research current information from the internet in real-time

- Combine public data with your proprietary knowledge bases

- Generate unique insights impossible to get from standard LLMs

- Adapt to your specific business domain and industry nuances

2.4. What You’ll Walk Away With

My goal isn’t to give you code to copy-paste (though you’ll get plenty of that). I want to transfer the mental models and architectural principles that will enable you to:

- Understand the philosophy behind deep AI research

- Design the architecture for your specific use case

- Implement the system using modern stack (Next.js 15, OpenAI, exa.ai)

- Integrate the solution into any existing product

- Scale and optimize the system for your needs

By the end of this article, you’ll have a complete architectural blueprint and production-ready code examples for building your own “Perplexity” — an AI agent that could become your product’s secret weapon.

Important: We’ll study not just the technical implementation, but the business logic too. Why is recursive search more effective than linear? How do you properly combine external and internal sources? What UX patterns work for long-running AI operations? These questions are just as critical as the code.

2.5. For the Impatient: Skip to the Code

For those who already get the concepts and want to dive straight into implementation, here’s the open-source solution we’ll be building: https://github.com/aifa-agi/aifa-deep-researcer-starter

I personally can’t stand articles that give you lots of words and little substance. Feel free to clone the repo and get it running in development mode right now.

Pro tip: In production on Vercel’s free hosting tier, long research runs will exceed the serverless function time limit and fail with timeout (504) errors, but on localhost you can fully experiment and study the logs to your heart’s content.

Ready to build the future of AI-powered research? Let’s start by understanding why LLMs need a “guide dog” to navigate the internet effectively.

3. Why LLMs Need a “Guide Dog”: The Critical Role of External Search Systems

Here’s a hard truth that many developers learn the expensive way: Large Language Models cannot independently access current information from the internet. This isn’t a bug — it’s a fundamental architectural limitation that requires a sophisticated solution: integration with specialized search systems designed for AI consumption.

3.1. Why Traditional Search Engines Are AI Poison

Google, Bing, and other traditional search engines were built for humans browsing the web, not for machines processing data. Their APIs return links and short snippets, and when you fetch the pages behind those links, you get HTML stuffed with:

- Ad blocks and navigation clutter that confuse content extraction

- Irrelevant content (comments, sidebars, footers, cookie banners)

- Unstructured data that requires complex parsing and often fails

```javascript
// The traditional approach - a nightmare for AI
// (illustrative URL only, not a real endpoint)
const htmlResponse = await fetch('https://api.bing.com/search?q=query');
const messyHtml = await htmlResponse.text();
// You get HTML soup with ads, scripts, and digital garbage
// Good luck extracting meaningful insights from this mess
```

I’ve seen teams spend weeks building HTML parsers, only to have them break every time a major site updates their layout. It’s not scalable, and it’s definitely not reliable.

3.2. Keyword Matching vs. Semantic Understanding: A World of Difference

Traditional keyword-based search matches on the words in the query and largely ignores context and meaning. A query like “Next.js optimization for e-commerce” might miss an excellent article about “boosting React application performance in online stores,” even though the two cover closely related ground.

This is like having a research assistant who can only find books by matching the exact words in the title, while ignoring everything about the actual content. For AI agents doing deep research, this approach is fundamentally broken.
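To make the contrast concrete, here is a minimal sketch that scores the same query and article two ways: by shared keywords and by cosine similarity between vectors. The three-dimensional vectors are hand-written stand-ins for real embeddings (which come from an embedding model and have hundreds or thousands of dimensions), and the function names are illustrative, not part of any library.

```typescript
// Keyword overlap: fraction of query words that also appear in the document.
function keywordOverlap(queryText: string, docText: string): number {
  const tokenize = (s: string): string[] =>
    s.toLowerCase().split(/[^a-z0-9.]+/).filter(Boolean);
  const queryWords = tokenize(queryText);
  const docWords = new Set(tokenize(docText));
  if (queryWords.length === 0) return 0;
  const shared = queryWords.filter((word) => docWords.has(word)).length;
  return shared / queryWords.length;
}

// Cosine similarity between two equal-length vectors.
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

const userQuery = "Next.js optimization for e-commerce";
const articleTitle = "Boosting React application performance in online stores";

// ASSUMPTION: toy embeddings written by hand for illustration only.
const userQueryVector = [0.9, 0.8, 0.1];
const articleVector = [0.8, 0.9, 0.2];

const overlapScore = keywordOverlap(userQuery, articleTitle);
const semanticScore = cosineSimilarity(userQueryVector, articleVector);
console.log({ overlapScore, semanticScore });
```

The two texts share no words, so the keyword score is zero, while the vectors point in nearly the same direction. That is the property meaning-based search relies on.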

3.3. AI-Native Search Engines: The Game Changer

Specialized systems like Exa.ai (formerly known as Metaphor) and Tavily solve the core problems that make traditional search unusable for AI:

Semantic Query Understanding

They use vector representations to search by meaning, not just keywords. Your AI can find relevant content even when the exact terms don’t match.

Clean, Structured Data

They return pre-processed content without HTML garbage. No more parsing nightmares or broken extractors.

Contextual Awareness

They accept long, natural-language queries, so your agent can fold previous findings and the overall research goal into each new query, enabling truly iterative investigation.

```javascript
// The AI-native approach - clean and powerful
import Exa from "exa-js";

const exa = new Exa(process.env.EXA_API_KEY);

// searchAndContents returns page text and a summary alongside each result
const cleanResults = await exa.searchAndContents(
  "Detailed analysis of Next.js performance optimization for high-traffic e-commerce platforms",
  { type: "neural", text: true, summary: true }
);
// You get clean, relevant content ready for AI processing
// No parsing, no cleanup, no headaches
```

3.4. Why This Matters for Production Systems

The quality of your input data directly determines the quality of your final research output. AI-native search engines provide:

Data Reliability: Structured content without the need for fragile HTML parsing

Scalability: Stable APIs designed for automated, high-volume usage

Cost Efficiency: Reduced computational overhead for data processing

Accuracy: Better source relevance leads to better final insights

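```javascript
// Hybrid search: external + internal sources
// (vectorStore stands in for whatever vector database client you use)
const [webResults, vectorResults] = await Promise.all([
  exa.search(query),
  vectorStore.similaritySearch(query)
]);
// exa.search resolves to an object whose `results` field holds the hits
const combinedContext = [...webResults.results, ...vectorResults];
// Now your AI has both current web data AND your proprietary knowledge
```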

3.5. The Bottom Line: Architecture Matters

AI-native search engines aren’t just a technical detail — they’re the architectural foundation that makes quality AI research agents possible. Without the right “guide dog,” even the most sophisticated LLM will struggle to create deep, accurate analysis of current information.

Think of it this way: you wouldn’t send a brilliant researcher into a library where all the books are written in code and half the pages are advertisements. Yet that’s exactly what happens when you connect an LLM to traditional search APIs.

The solution? Give your AI the right tools for the job. In the next section, we’ll dive into the specific architecture patterns that make recursive, deep research possible.

Ready to see how the pieces fit together? Let’s explore the system design that powers truly intelligent AI research agents.

4. Think Like a Tree: The Architecture of Recursive Search

The human brain naturally structures complex information as hierarchical networks. When a researcher investigates a new topic, they don’t move in a straight line — they develop a tree-like knowledge network where each new discovery generates additional questions and research directions. This is exactly the mental model we need to implement in our deep search AI agent architecture.

4.1. The Fundamental Difference in Approaches

Traditional search systems and built-in web search in LLMs work linearly: receive query → perform search → return results → generate answer. This approach has critical limitations for deep research.

Problems with the Linear Approach:

- Surface-level results: The system stops at the first facts it finds

- No context continuity: Each search query is isolated from previous ones

- Missing connections: The system can’t see relationships between different aspects of a topic

- Random quality: Results depend entirely on the luck of the initial query

The tree-based approach solves these problems by modeling the natural process of human investigation. Each discovered source can generate new questions, which become separate research branches.

4.2. Anatomy of a Search Tree

Let’s examine the structure of a deep search tree with a concrete example:

```text
Next.js vs WordPress for AI Projects/
├── Performance/
│   ├── Source 1
│   ├── Source 2
│   └── Impact of AI Libraries on Next.js Performance/
│       └── Source 7
├── Development Costs/
│   ├── Source 3
│   └── Source 4
└── SEO and Indexing/
    ├── Source 5
    └── Source 6
```

First-level branches are the main aspects of the topic that the LLM generates based on analysis of the original query. In our example, these are performance, costs, and SEO. These subtopics aren’t formed randomly — the LLM analyzes the semantic space of the query and identifies key research directions.

Tree leaves are specific sources (articles, documents, studies) found for each sub-query. Each leaf contains factual information that will be included in the final report.

Recursive branches are the most powerful feature of this architecture. When the system analyzes found sources, it can discover new aspects of the topic that require additional investigation. These aspects become new sub-queries, generating their own branches.
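One way to picture this structure in code is a small recursive type. The sketch below is an illustration only: `ResearchNode`, `collectSources`, and `topicLevels` are hypothetical names, not types from the starter repository. It encodes the example tree above and walks it.

```typescript
// A node is either a topic (branch) with children or a source (leaf).
type ResearchNode =
  | { kind: "topic"; title: string; children: ResearchNode[] }
  | { kind: "source"; title: string };

const source = (title: string): ResearchNode => ({ kind: "source", title });
const topic = (title: string, children: ResearchNode[]): ResearchNode => ({
  kind: "topic",
  title,
  children,
});

const exampleTree = topic("Next.js vs WordPress for AI Projects", [
  topic("Performance", [
    source("Source 1"),
    source("Source 2"),
    // A recursive branch discovered while reading the sources above
    topic("Impact of AI Libraries on Next.js Performance", [source("Source 7")]),
  ]),
  topic("Development Costs", [source("Source 3"), source("Source 4")]),
  topic("SEO and Indexing", [source("Source 5"), source("Source 6")]),
]);

// Depth-first walk that gathers every leaf source.
function collectSources(node: ResearchNode): string[] {
  if (node.kind === "source") return [node.title];
  return node.children.reduce<string[]>(
    (found, child) => found.concat(collectSources(child)),
    []
  );
}

// Number of topic levels on the longest path, counting the root topic.
function topicLevels(node: ResearchNode): number {
  if (node.kind === "source") return 0;
  return 1 + Math.max(0, ...node.children.map(topicLevels));
}

console.log(collectSources(exampleTree).length); // 7 sources
console.log(topicLevels(exampleTree)); // 3 levels
```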

4.3. Practical Advantages of Tree Architecture

Research Completeness: The tree ensures systematic topic coverage. Instead of a random collection of facts, the system builds a logically connected knowledge map where each element has its place in the overall structure.

Adaptive Depth: The system automatically determines which directions require deeper investigation. If one branch yields many relevant sources, the system can go deeper. If a direction proves uninformative, the search terminates early.

Contextual Connectivity: Each new search query is formed considering already found information. This allows for more precise and specific questions than isolated searches.

Quality Assessment: At each tree level, the system can evaluate the relevance and quality of found sources, filtering noise and concentrating on the most valuable information.

4.4. Managing Tree Parameters

Search Depth determines how many recursion levels the system can perform. Depth 1 means only main sub-queries without further drilling down. Depth 3-4 allows for truly detailed investigation.

Search Width controls the number of sub-queries at each level. Too much width can lead to superficial investigation of many directions. Optimal width is usually 3-5 main directions per level.

Branching Factor is the average number of child nodes for each tree node. In the context of information search, this corresponds to the number of new sub-queries generated based on each found source.
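To get a feel for how these parameters interact, here is a rough cost model. It assumes a simplification: every query at one level spawns exactly `width` sub-queries at the next level. The real agent branches per relevant source, so actual numbers will differ. `countQueries` is a hypothetical helper written for this illustration.

```typescript
// Counts the sub-queries issued across all levels in the simplified model.
function countQueries(
  depth: number,
  breadth: number,
  halveEachLevel: boolean
): number {
  let total = 0;
  let nodesAtLevel = 1; // the root query
  let width = breadth;
  for (let level = 1; level <= depth; level++) {
    nodesAtLevel *= width; // every node at the previous level branches `width` times
    total += nodesAtLevel;
    if (halveEachLevel) width = Math.ceil(width / 2);
  }
  return total;
}

console.log(countQueries(3, 3, false)); // 3 + 9 + 27 = 39
console.log(countQueries(3, 3, true)); // 3 + 6 + 6 = 15
console.log(countQueries(4, 5, false)); // 5 + 25 + 125 + 625 = 780
console.log(countQueries(4, 5, true)); // 5 + 15 + 30 + 30 = 80
```

Even in this toy model, one extra level of depth at constant width multiplies the work, which is why depth is usually the first parameter to cap.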

4.5. Optimization and Problem Prevention

Cycle Prevention: The system must track already investigated directions to avoid infinite recursion loops.

Dynamic Prioritization: More promising branches should be investigated with greater depth, while less informative directions can be terminated earlier.

Parallel Investigation: Different tree branches can be investigated in parallel, significantly speeding up the process when sufficient computational resources are available.

Memory and Caching: Search results should be cached to avoid repeated requests to external APIs when topics overlap.

Execution Time and Server Timeouts: Long run times are a common problem when implementing deep research, especially once depth exceeds two levels. Each additional level multiplies the number of searches and LLM calls, so cost grows roughly exponentially with depth. For example, research with four levels of depth can take up to 12 hours.
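Cycle prevention and caching can share one building block: a normalized query key. The following is a minimal in-memory sketch. The names `normalizeQuery`, `withCache`, and `VisitedQueries` are assumptions made for this example, and a production system would likely want a persistent cache with expiry.

```typescript
type SearchFn = (query: string) => Promise<string[]>;

// Normalize so trivially different phrasings map to the same key.
function normalizeQuery(query: string): string {
  return query.toLowerCase().replace(/\s+/g, " ").trim();
}

// Wraps a search function with a cache keyed by the normalized query.
// Promises are cached, so concurrent identical requests share one call.
function withCache(search: SearchFn): SearchFn {
  const cache = new Map<string, Promise<string[]>>();
  return (query) => {
    const key = normalizeQuery(query);
    const cached = cache.get(key);
    if (cached) return cached;
    const pending = search(query);
    cache.set(key, pending);
    // Do not keep failed requests in the cache, so they can be retried.
    pending.catch(() => {
      cache.delete(key);
    });
    return pending;
  };
}

// Tracks which research directions were already investigated.
class VisitedQueries {
  private seen = new Set<string>();

  // Returns true the first time a query is seen, false on repeats.
  markIfNew(query: string): boolean {
    const key = normalizeQuery(query);
    if (this.seen.has(key)) return false;
    this.seen.add(key);
    return true;
  }
}
```

A recursive agent would call `markIfNew` before descending into a branch and skip the branch when it returns `false`.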

4.6. The Bottom Line: From Chaos to System

Tree architecture transforms the chaotic process of information search into systematic investigation, where each element has its place in the overall knowledge structure. This allows the AI agent to work like an experienced researcher — not just collecting facts, but building a comprehensive understanding of the investigated topic.

The result? An AI system that thinks like a human researcher, but operates at machine scale and speed. In the next section, we’ll dive into the technical implementation that makes this architectural vision a reality.

Ready to see how we translate this conceptual framework into production code? Let’s walk through the search-evaluate-deepen cycle that implements it.

5. The “Search-Evaluate-Deepen” Cycle: Implementing True Recursion

Recursive internet parsing isn’t just a technical feature — it’s a fundamental necessity for creating truly intelligent AI agents. The first page of any search results shows only the tip of the information iceberg. Real insights lie deeper, in related articles, cited sources, and specialized research that most systems never reach.

5.1. Data Architecture for Deep Investigation

In production implementations, the system operates with structured data types that accumulate knowledge at each recursion level:

```typescript
type Learning = {
  learning: string;
  followUpQuestions: string[];
};

type SearchResult = {
  title: string;
  url: string;
  content: string;
  publishedDate: string;
};

type Research = {
  query: string | undefined;
  queries: string[];
  searchResults: SearchResult[];
  knowledgeBaseResults: string[]; // Vector database responses
  learnings: Learning[];
  completedQueries: string[];
};
```

This data structure accumulates knowledge at each recursion level, creating unified context for the entire investigation — exactly what separates professional research from random fact-gathering.
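Because the same page often turns up under several sub-queries, it can help to guard the accumulator against duplicates. Here is a small sketch. `createResearch` and `addSearchResults` are hypothetical helpers (not from the repository), and the types are repeated only so the snippet stands alone.

```typescript
type Learning = { learning: string; followUpQuestions: string[] };
type SearchResult = {
  title: string;
  url: string;
  content: string;
  publishedDate: string;
};
type Research = {
  query: string | undefined;
  queries: string[];
  searchResults: SearchResult[];
  knowledgeBaseResults: string[];
  learnings: Learning[];
  completedQueries: string[];
};

// Starts an empty accumulator for one investigation.
function createResearch(query: string): Research {
  return {
    query,
    queries: [],
    searchResults: [],
    knowledgeBaseResults: [],
    learnings: [],
    completedQueries: [],
  };
}

// Appends only results whose URL is not stored yet; returns how many were added.
function addSearchResults(research: Research, results: SearchResult[]): number {
  const knownUrls = new Set(research.searchResults.map((r) => r.url));
  let added = 0;
  for (const result of results) {
    if (knownUrls.has(result.url)) continue;
    knownUrls.add(result.url);
    research.searchResults.push(result);
    added++;
  }
  return added;
}
```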

5.2. Stage 1: “Search” — Intelligent Query Generation

The system doesn’t rely on a single search query. Instead, it generates multiple targeted queries using LLM intelligence:

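```typescript
import { generateObject } from "ai";
import { z } from "zod";

// mainModel is the language model instance configured elsewhere in the app
const generateSearchQueries = async (query: string, breadth: number) => {
  const {
    object: { queries },
  } = await generateObject({
    model: mainModel,
    prompt: `Generate ${breadth} search queries for the following query: ${query}`,
    // The schema guarantees a typed, non-empty array of at most 10 strings
    schema: z.object({
      queries: z.array(z.string()).min(1).max(10),
    }),
  });
  return queries;
};
```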

Key insight: The breadth parameter controls research width, the number of different topic aspects investigated at each level. Because every aspect can branch again at the next level, coverage grows multiplicatively with depth instead of following a single line of inquiry.
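Note that the schema above caps the list at 10 entries regardless of `breadth`, and a model may return near-duplicates. One possible post-processing step is sketched below. `normalizeQueries` is a hypothetical helper, not part of the original implementation.

```typescript
// Trims queries, drops empties and case-insensitive duplicates,
// and caps the list at the requested breadth.
function normalizeQueries(queries: string[], breadth: number): string[] {
  const seen = new Set<string>();
  const unique: string[] = [];
  for (const raw of queries) {
    const trimmed = raw.trim();
    const key = trimmed.toLowerCase();
    if (trimmed === "" || seen.has(key)) continue;
    seen.add(key);
    unique.push(trimmed);
  }
  return unique.slice(0, Math.max(0, breadth));
}

console.log(normalizeQueries([" Next.js SEO ", "next.js seo", "", "WordPress SEO", "Hosting costs"], 2));
// [ 'Next.js SEO', 'WordPress SEO' ]
```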

5.3. Stage 2: “Evaluate” — AI-Driven Result Filtering

Not all found sources are equally valuable. The system uses an AI agent with tools for intelligent evaluation of each result:

```typescript
const searchAndProcess = async (/* parameters */) => {
  const pendingSearchResults: SearchResult[] = []; // found, not yet evaluated
  const finalSearchResults: SearchResult[] = []; // judged relevant

  await generateText({
    model: mainModel,
    prompt: `Search the web for information about ${query}. For each item, where possible, collect detailed examples of use cases (news stories) with a detailed description.`,
    system:
      "You are a researcher. For each query, search the web and then evaluate if the results are relevant",
    maxSteps: 10, // upper bound on search/evaluate rounds
    tools: {
      searchWeb: tool({
        description: "Search the web for information about a given query",
        parameters: z.object({ query: z.string().min(1) }),
        async execute({ query }) {
          const results = await searchWeb(query, breadth, /* other params */);
          pendingSearchResults.push(...results);
          return results;
        },
      }),
      evaluate: tool({
        description: "Evaluate the search results",
        parameters: z.object({}),
        async execute() {
          const pendingResult = pendingSearchResults.pop();
          if (!pendingResult) return "No search results available for evaluation.";

          const { object: evaluation } = await generateObject({
            model: mainModel,
            // The result under review and the URLs already accepted are passed
            // in, so the model has something concrete to judge.
            prompt: `Evaluate whether the search results are relevant and will help answer the following query: ${query}. If the page already exists in the existing results, mark it as irrelevant.

<search_results>
${JSON.stringify(pendingResult)}
</search_results>

<existing_results>
${JSON.stringify(finalSearchResults.map((result) => result.url))}
</existing_results>`,
            output: "enum",
            enum: ["relevant", "irrelevant"],
          });

          if (evaluation === "relevant") {
            finalSearchResults.push(pendingResult);
          }

          return evaluation === "irrelevant"
            ? "Search results are irrelevant. Please search again with a more specific query."
            : "Search results are relevant. End research for this query.";
        },
      }),
    },
  });

  return finalSearchResults;
};
```

Revolutionary approach: The system uses an AI agent with tools that can repeatedly search and evaluate results until it finds sufficient relevant information. This is like having a research assistant who doesn’t give up after the first Google search.
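Stripped of the LLM plumbing, the control flow is a retry loop. The sketch below replaces the model-driven tool calls with a plain deterministic loop and injected functions, so it is a simplification rather than the agent above. `searchUntilRelevant` and its `search`, `evaluate`, and `refine` parameters are hypothetical.

```typescript
type Hit = { title: string; url: string; content: string };
type Verdict = "relevant" | "irrelevant";

async function searchUntilRelevant(
  query: string,
  search: (q: string) => Promise<Hit[]>,
  evaluate: (q: string, hit: Hit) => Promise<Verdict>,
  refine: (q: string, attempt: number) => string,
  maxAttempts = 3
): Promise<Hit[]> {
  const accepted: Hit[] = [];
  let currentQuery = query;

  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const hits = await search(currentQuery);
    for (const hit of hits) {
      // Pages that were already accepted are skipped, never re-evaluated.
      const duplicate = accepted.some((a) => a.url === hit.url);
      if (!duplicate && (await evaluate(query, hit)) === "relevant") {
        accepted.push(hit);
      }
    }
    if (accepted.length > 0) break; // something useful was found: stop
    currentQuery = refine(query, attempt); // otherwise retry with a reworded query
  }
  return accepted;
}
```

The `maxAttempts` bound plays the same role as `maxSteps` in the agent version: it keeps a hopeless query from consuming the whole budget.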

5.4. Vector Knowledge Base Integration

The real power emerges from synergy between external and internal search. For each query, the system simultaneously searches the internet and its own vector knowledge base:

```typescript
import OpenAI from "openai";

// Asks the model to answer the query using only the given vector store
async function getKnowledgeItem(query: string, vectorStoreId: string) {
  const client = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

  const response = await client.responses.create({
    model: "gpt-4o-mini",
    tools: [
      {
        type: "file_search",
        vector_store_ids: [vectorStoreId],
        max_num_results: 5,
      },
    ],
    input: [
      {
        role: "developer",
        content: `Search the vector store for information. Output format language: ${process.env.NEXT_PUBLIC_APP_HTTP_LANG || "en"}`,
      },
      {
        role: "user",
        content: query,
      },
    ],
  });

  // output_text is the SDK's concatenation of all text output items
  return response.output_text;
}
```

5.5. Practical Implementation

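In the main research loop, the system consults both sources for every generated query. In this version the two calls run one after the other; they are independent, so they could also run concurrently with Promise.all: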

```typescript
for (const query of queries) {
  const searchResults = await searchAndProcess(/* web search */);
  accumulatedResearch.searchResults.push(...searchResults);

  if (vectorStoreId && vectorStoreId !== "") {
    const kbResult = await getKnowledgeItem(query, vectorStoreId);
    accumulatedResearch.knowledgeBaseResults.push(kbResult);
  }
}
```
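Since the web search and the knowledge base lookup do not depend on each other, a concurrent variant is straightforward. This is a sketch under assumptions: `researchQuery`, `webSearch`, and `kbSearch` are hypothetical names for injected functions, and a failure in one source is treated as "no data" so the other source can still contribute.

```typescript
type WebHit = { url: string; content: string };

type QueryFindings = {
  searchResults: WebHit[];
  knowledgeBaseResult: string | null;
};

async function researchQuery(
  query: string,
  webSearch: (q: string) => Promise<WebHit[]>,
  kbSearch?: (q: string) => Promise<string>
): Promise<QueryFindings> {
  // Each promise gets its own fallback, so one failing source cannot
  // reject the whole Promise.all. Real code should also log the error.
  const webPromise: Promise<WebHit[]> = webSearch(query).catch(() => []);
  const kbPromise: Promise<string | null> = kbSearch
    ? kbSearch(query).catch(() => null)
    : Promise.resolve(null);

  const [searchResults, knowledgeBaseResult] = await Promise.all([
    webPromise,
    kbPromise,
  ]);
  return { searchResults, knowledgeBaseResult };
}
```

Running both lookups at once roughly halves the per-query latency when they take similar time, at the cost of hitting both APIs' rate limits simultaneously.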

5.6. Stage 3: “Deepen” — Generating Follow-Up Questions

The most powerful feature: the system’s ability to generate new research directions based on already found information:

```typescript
const generateLearnings = async (query: string, searchResult: SearchResult) => {
  const { object } = await generateObject({
    model: mainModel,
    prompt: `The user is researching "${query}". The following search result was deemed relevant.
Generate a learning and follow-up questions from the following search result:

${JSON.stringify(searchResult)}
`,
    // Matches the Learning type: one insight plus a list of follow-ups
    schema: z.object({
      learning: z.string(),
      followUpQuestions: z.array(z.string()),
    }),
  });
  return object;
};
```

5.7. Recursive Deepening

Each found source is analyzed to extract new questions that become the foundation for the next search level:

```typescript
for (const searchResult of searchResults) {
  const learnings = await generateLearnings(query, searchResult);
  accumulatedResearch.learnings.push(learnings);
  accumulatedResearch.completedQueries.push(query);

  const newQuery = `Overall research goal: ${prompt}
Previous search queries: ${accumulatedResearch.completedQueries.join(", ")}
Follow-up questions: ${learnings.followUpQuestions.join(", ")}`;

  await deepResearch(
    /* search parameters */,
    newQuery,
    depth - 1,
    Math.ceil(breadth / 2), // Reduce width at each level
    vectorOfThought,
    accumulatedResearch,
    vectorStoreId
  );
}
```

5.8. Managing Depth and Complexity

The production implementation shows how to manage exponential complexity growth:

```typescript
const deepResearch = async (
  /* multiple filtering parameters */,
  prompt: string,
  depth: number = 2,
  breadth: number = 5,
  vectorOfThought: string[] = [],
  accumulatedResearch: Research = {
    query: undefined,
    queries: [],
    searchResults: [],
    knowledgeBaseResults: [],
    learnings: [],
    completedQueries: [],
  },
  vectorStoreId: string
): Promise<Research> => {
  if (depth === 0) {
    return accumulatedResearch; // Base case for recursion
  }

  // Adaptive query formation based on "thought vector"
  let updatedPrompt = "";
  if (vectorOfThought.length === 0) {
    updatedPrompt = prompt;
  } else {
    // Picks the entry matching the current level, counted from the end
    const vectorOfThoughtItem = vectorOfThought[vectorOfThought.length - depth];
    updatedPrompt = `${prompt}, focus on these important branches of thought: ${vectorOfThoughtItem}`;
  }

  // ... rest of implementation
};
```
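To see the whole recursion in one place, here is a self-contained skeleton with the LLM and search calls replaced by injected functions. It is a simplified sketch, not the repository's implementation: `deepResearchSketch`, `ResearchDeps`, and `Findings` are hypothetical names, and it omits the thought vector and knowledge base lookup.

```typescript
type ResearchDeps = {
  generateQueries: (prompt: string, breadth: number) => Promise<string[]>;
  search: (query: string) => Promise<string[]>; // returns source identifiers
  followUp: (query: string, source: string) => Promise<string>; // next prompt
};

type Findings = { sources: string[]; completedQueries: string[] };

async function deepResearchSketch(
  prompt: string,
  depth: number,
  breadth: number,
  deps: ResearchDeps,
  findings: Findings = { sources: [], completedQueries: [] }
): Promise<Findings> {
  if (depth === 0) return findings; // base case

  const queries = await deps.generateQueries(prompt, breadth);
  for (const query of queries) {
    // Cycle guard: never investigate the same query twice.
    if (findings.completedQueries.indexOf(query) !== -1) continue;
    findings.completedQueries.push(query);

    const sources = await deps.search(query);
    for (const source of sources) {
      findings.sources.push(source);
      const nextPrompt = await deps.followUp(query, source);
      // Go one level deeper with half the width (rounded up).
      await deepResearchSketch(
        nextPrompt,
        depth - 1,
        Math.ceil(breadth / 2),
        deps,
        findings
      );
    }
  }
  return findings;
}
```

All levels write into the same `findings` object, which mirrors how `accumulatedResearch` is threaded through the recursive calls above.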

5.9. Key Optimizations

Width Reduction: Math.ceil(breadth / 2) at each level slows the growth in the number of branches. It does not remove the exponential trend, but it keeps deeper levels affordable.

Thought Vector: vectorOfThought allows directing research into specific areas

Context Accumulation: All results are preserved in a unified data structure

5.10. The Hybrid Advantage in Practice

Creating Unique Content: Combining public data with internal knowledge enables creating reports that no one else can replicate. Your competitors may access the same public sources, but not your internal cases, statistics, and expertise.

Context Enrichment: External data provides currency and breadth, internal data provides depth and specificity. The system can find general industry trends online, then supplement them with your own data about how these trends affect your business.

Maintaining Currency: Even if web information is outdated or inaccurate, your internal knowledge base can provide fresher and verified data.

6. From Chaos to Order: Generating Expert-Level Reports

After completing all levels of recursive search, the system accumulates massive amounts of disparate information: web search results, vector database data, generated learnings, and follow-up questions. The final stage is transforming this chaos into a structured, expert-level report that rivals human analysis.

6.1. Context Accumulation: Building the Complete Picture

All collected data is unified into a single Research structure that serves as complete context for final synthesis:

```typescript
type Research = {
  query: string | undefined; // Original query
  queries: string[]; // All generated search queries
  searchResults: SearchResult[]; // Web search results
  knowledgeBaseResults: string[]; // Vector database responses
  learnings: Learning[]; // Extracted insights
  completedQueries: string[]; // History of completed queries
};
```

This isn’t just data storage; it’s a complete record of the investigation journey. Every insight, every source, and every completed query is preserved for the final synthesis.

6.2. The Master Prompt: Where Intelligence Meets Synthesis

The quality of the final report directly depends on the sophistication of the generation prompt. For synthesis, the system uses one of OpenAI’s reasoning models (o3-mini in this implementation):

```typescript
const generateReport = async (
  research: Research,
  vectorOfThought: string[],
  systemPrompt: string
) => {
  const { text } = await generateText({
    model: openai("o3-mini"), // Reasoning model used for synthesis
    system: systemPrompt,
    prompt:
      "Use the following structured research data to generate a detailed expert report:\n\n" +
      JSON.stringify(research, null, 2),
  });
  return text;
};
```

Key insight: We’re not just asking the AI to summarize — we’re providing it with a complete research dataset and asking it to think like a domain expert. The difference in output quality is dramatic.

6.3. Structured Output: Beyond Simple Summaries

The system doesn’t just create a text summary — it generates structured documents with headers, tables, pro/con lists, and professional formatting, as shown in the result saving:

```typescript
import fs from "node:fs";

console.log("Research completed!");
console.log("Generating report...");

const report = await generateReport(research, vectorOfThought, systemPrompt);

console.log("Report generated! Saving to report.md");
fs.writeFileSync("report.md", report); // Save as Markdown
```

Why Markdown? It’s the perfect format for AI-generated content — structured enough for professional presentation, flexible enough for various output formats, and readable in any modern development workflow.

6.4. Quality Control Through System Prompts

The systemPrompt allows customizing report style and structure for specific needs:

- Academic style for research papers and scholarly analysis

- Business format for corporate reports and executive summaries

- Technical documentation for developer-focused content

- Investment analysis for financial and strategic reports

```typescript
// Example: Business-focused system prompt
const businessSystemPrompt = `You are a senior business analyst creating an executive report. Structure your analysis with:

- Executive Summary
- Key Findings
- Market Implications
- Recommendations
- Risk Assessment

Use data-driven insights and provide specific examples from the research.`;
```
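One way to support several report styles is a lookup table of system prompts keyed by style. The sketch below is an assumption about how this could be organized: the `ReportStyle` names, the prompt texts, and `selectSystemPrompt` are all hypothetical and only mirror the four styles listed above.

```typescript
// Hypothetical style names that mirror the four styles listed above.
type ReportStyle = "academic" | "business" | "technical" | "investment";

// Illustrative prompt texts; tune these for your own domain.
const systemPrompts: Record<ReportStyle, string> = {
  academic:
    "You are a scholarly researcher. Write a structured analysis and attribute every claim to a source from the research data.",
  business:
    "You are a senior business analyst creating an executive report with key findings, implications, and recommendations.",
  technical:
    "You are a staff engineer writing developer documentation. Prefer precise terminology and concrete examples.",
  investment:
    "You are an investment analyst. Cover market context, risks, and strategic outlook using only the research data.",
};

// Pick the base prompt for a style and optionally append per-request instructions.
function selectSystemPrompt(style: ReportStyle, extraInstructions?: string): string {
  const base = systemPrompts[style];
  return extraInstructions
    ? `${base}\n\nAdditional instructions: ${extraInstructions}`
    : base;
}
```

The selected string can then be passed as the `systemPrompt` argument of `generateReport`.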

6.5. The Intelligence Multiplier Effect

Here's what makes this approach powerful: the system doesn't just aggregate information. It synthesizes insights that emerge from the connections between different sources. A human researcher might spend 8-12 hours conducting this level of investigation. Our system typically does it in 10-60 minutes, and it can surface connections that a human might miss.

6.6. Production Considerations

Memory Management: With deep research (depth 3-4), the accumulated context can become massive. The system needs to handle large JSON structures efficiently.

Token Optimization: The final synthesis prompt can easily exceed token limits. Production implementations need smart truncation strategies that preserve the most valuable insights.

Quality Assurance: Not all generated reports are equal. Consider implementing scoring mechanisms to evaluate report completeness and coherence.
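As an illustration of the truncation strategy mentioned under Token Optimization, here is a minimal sketch that keeps the highest-scoring learnings that fit a token budget. The `ScoredLearning` shape, the relevance score, and the four-characters-per-token estimate are assumptions. A production system would count tokens with a real tokenizer.

```typescript
// Hypothetical shape: a learning annotated with a relevance score in [0, 1].
type ScoredLearning = { text: string; score: number };

// Rough heuristic for English text: about 4 characters per token.
// This is an approximation, not an exact count.
const estimateTokens = (text: string): number => Math.ceil(text.length / 4);

// Keep the highest-scoring learnings that fit within the token budget.
function fitToBudget(learnings: ScoredLearning[], maxTokens: number): ScoredLearning[] {
  // Sort a copy so the caller's array is left untouched.
  const ranked = [...learnings].sort((a, b) => b.score - a.score);
  const kept: ScoredLearning[] = [];
  let used = 0;
  for (const item of ranked) {
    const cost = estimateTokens(item.text);
    // Skip items that do not fit; a smaller, lower-ranked item may still fit.
    if (used + cost > maxTokens) continue;
    kept.push(item);
    used += cost;
  }
  return kept;
}
```

The same idea extends to search results: rank by relevance, then fill the budget greedily, so that the least valuable material is dropped first.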

6.7. Real-World Impact

Time Compression: Hours of human research → Minutes of AI analysis

Depth Enhancement: AI can process and connect more sources than humanly possible

Consistency: Every report follows the same rigorous methodology

Scalability: Generate dozens of reports simultaneously

7. Conclusion: Building the Future of AI Research

Creating a deep research AI agent isn’t just a technical challenge — it’s an architectural solution that can become a competitive advantage for any product. We’ve covered the complete cycle from concept to implementation, showing how to transform the chaotic process of information search into systematic, expert-level investigation.

7.1. Key Architectural Principles

Think in Trees, Not Lines: Deep search is about exploring tree-structured information networks, where each discovery generates new questions and research directions.

Use AI-Native Tools: Specialized search engines like exa.ai are essential for quality research. They return clean, extracted page content, so you don't have to scrape and parse raw HTML yourself as you would with traditional search APIs.

Apply Recursion for Depth: The first page of results is just the tip of the iceberg. Real insights lie in recursive deepening through the “Search-Evaluate-Deepen” cycle.

Combine External and Internal Sources: The synergy between public internet data and private organizational knowledge creates unique content that’s impossible to obtain any other way.

Use LLMs for Both Analysis and Synthesis: AI agents with tools can not only search for information but also evaluate its relevance, generate new questions, and create structured reports.

7.2. Production-Ready Results

The implementation based on Next.js 15, OpenAI, and exa.ai demonstrates that such a system can be built and deployed to production. The code from https://github.com/aifa-agi/aifa-deep-researcer-starter showcases all key components:

- Recursive architecture with depth and width management

- Integration of web search with vector knowledge bases

- AI agents with tools for result evaluation

- Expert report generation with file saving capabilities

7.3. Challenges and Limitations

Server Timeouts: Research with depth greater than 2 levels can take hours, requiring special solutions for production environments.

Exponential Complexity Growth: Each depth level increases the number of queries geometrically, requiring careful resource management.

Source Quality: Even AI search engines can return inaccurate information, requiring additional validation and fact-checking.
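To make the exponential growth described above concrete, the sketch below counts the total number of search queries for a given breadth and depth. It assumes every query spawns the same number of follow-up queries at each level. That is a simplification, and the real implementation may narrow the breadth as it goes deeper.

```typescript
// Total queries for a tree where each query spawns `breadth` follow-ups
// and the tree is `depth` levels deep: breadth + breadth^2 + ... + breadth^depth.
// Assumes a constant breadth at every level (a simplification).
function totalQueries(breadth: number, depth: number): number {
  let total = 0;
  let queriesAtLevel = 1;
  for (let level = 1; level <= depth; level++) {
    queriesAtLevel *= breadth;
    total += queriesAtLevel;
  }
  return total;
}

console.log(totalQueries(3, 2)); // 12
console.log(totalQueries(3, 4)); // 120
```

With a breadth of 3, going from depth 2 to depth 4 multiplies the workload tenfold, which is why depth is the parameter to cap first.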

7.4. Your Next Steps

Now you have a complete architectural blueprint and real code examples. You can:

Start with Minimal Implementation: Use the basic version from this article for prototyping

Explore the Ready Solution: Clone https://github.com/aifa-agi/aifa-deep-researcer-starter and experiment locally

Adapt to Your Needs: Integrate these principles into existing products and workflows

8. Homework Challenge: Solving the Long-Wait UX Problem

We’ve covered the technical architecture of deep research AI agents, but there’s a critically important UX problem remaining: what do you do when the system works for several minutes while the user stares at a blank screen? As the real implementation from aifa-deep-researcer-starter shows, research with depth greater than 2 levels can take hours, while users see only a static loading screen.

8.1. The Problem: Server Silence Kills Trust

Unlike typical web applications where operations take seconds, deep research AI agents can be silent for minutes. Users get no feedback from the server until the very end of the process. This creates several psychological problems:

- Waiting Anxiety: Users don’t know if the system is working or frozen

- Loss of Control: No way to understand how much longer to wait

- Declining Trust: It seems like the application is broken or “ate” the request

- High Bounce Rate: Users close the tab without waiting for results

Perplexity, Claude, and other modern AI products solve this with interactive animations, progress indicators, and dynamic hints. But how do you implement something similar when your server doesn’t send intermediate data?

8.2. The Developer Challenge

Imagine this technical constraint: your Next.js API route performs a long operation (deep research) and can’t send intermediate data until completion. The frontend only gets a response at the very end. Classic solutions like Server-Sent Events or WebSockets might be unavailable due to hosting limitations or architecture constraints.

How do you create engaging UX under these conditions that will retain users and reduce waiting anxiety?

8.3. Discussion Questions

UX Patterns and Visualization:

- What UX patterns would you apply to visualize deep search processes when the server is “silent”?

- How can you simulate “live” progress even without real server status updates?

- Should you use fake progress bars, or does this violate user trust?

- What animations and micro-interactions help create a sense of a “living” system?

User Communication:

- How do you explain to users why the wait might be long? What text and illustrations would you use?

- Should you show estimated wait times if they can vary greatly (2 to 60 minutes)?

- How do you visualize process stages (“Generating search queries…”, “Analyzing sources…”, “Forming expert report…”)?

- What metaphors help users understand the value of waiting?

Technical Implementation:

- What optimistic UI approaches can be applied without server feedback?

- How do you implement a “conversational” interface that supports users during waiting?

- Can you use local computations (Web Workers, WASM) to simulate progress?

- How do you organize graceful degradation if users close the tab during research?
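As one possible starting point for the questions above, here is a sketch of a client-side progress estimate that needs no server feedback. It rises quickly, slows down over time, and never claims completion on its own. The function names, the 95% cap, and the expected duration are assumptions, and the stage labels are borrowed from the examples above. Whether to present such an estimate to users, and how to label it, remains the open trust question.

```typescript
// Stage labels borrowed from the examples in the discussion questions.
const stages: string[] = [
  "Generating search queries...",
  "Analyzing sources...",
  "Forming expert report...",
];

// Upper bound for simulated progress: only a real server response
// should ever move the UI to 100%.
const MAX_SIMULATED = 0.95;

// Asymptotic estimate: 1 - e^(-elapsed / expected), clamped to [0, MAX_SIMULATED].
// `expectedMs` is an assumed typical duration, not a value reported by the server.
function simulatedProgress(elapsedMs: number, expectedMs: number): number {
  if (expectedMs <= 0) return MAX_SIMULATED;
  const fraction = 1 - Math.exp(-elapsedMs / expectedMs);
  return Math.min(MAX_SIMULATED, Math.max(0, fraction));
}

// Map a progress value in [0, 1] to a stage label.
function stageFor(progress: number): string {
  const index = Math.min(stages.length - 1, Math.floor(progress * stages.length));
  return stages[Math.max(0, index)];
}
```

In a React component, these functions could be called from a timer to update a progress bar and a status line while the request is pending.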

8.4. Learning from the Best

Study the solutions implemented in Perplexity Deep Research, Bing Copilot, and Google Search Generative Experience. What can you take from gaming loading screens that hold attention for minutes? How do Netflix, YouTube, and other platforms handle long content loading times?

Remember: In an era of instant ChatGPT responses, a well-designed waiting experience can become a competitive advantage. Users are willing to wait if they understand the value of the process and feel the system is working for them.

9. About the Author and AIFA Project

In his recent publication series, the author, Roman Bolshiyanov, details the tools and architectural solutions he implements in his ambitious open-source project AIFA (AI agents in evolving and self-replicating architecture).

In its current implementation, AIFA already represents an impressive starter template for creating AI-first applications with a unique user interface where artificial intelligence becomes the primary interaction method, while the traditional web interface serves as an auxiliary visualization.

However, this is just the beginning. The project’s long-term goal is evolution into a full-fledged AGI system where AI agents will possess capabilities for:

- Autonomous evolution and improvement of their algorithms

- Self-replication and creation of new specialized agents

- Competition and cooperation in distributed environments

- Autonomous operation in web spaces and blockchain networks

The deep search covered in this article is just one of the basic skills of future AGI agents that will be able to not only research information but also make decisions, create products, and interact with the real world.

If you’re interested in observing the project’s development and experimenting with cutting-edge AI technologies, don’t hesitate to fork the AIFA repository and dive into exploring the architecture of the future. Each commit brings us closer to creating truly autonomous artificial intelligence.

Ready to build the future? The code is open, the architecture is proven, and the possibilities are limitless. Your next breakthrough in AI-powered research is just a git clone away.