Under the Hood of WebRTC: From SDP to ICE and DTLS in Production

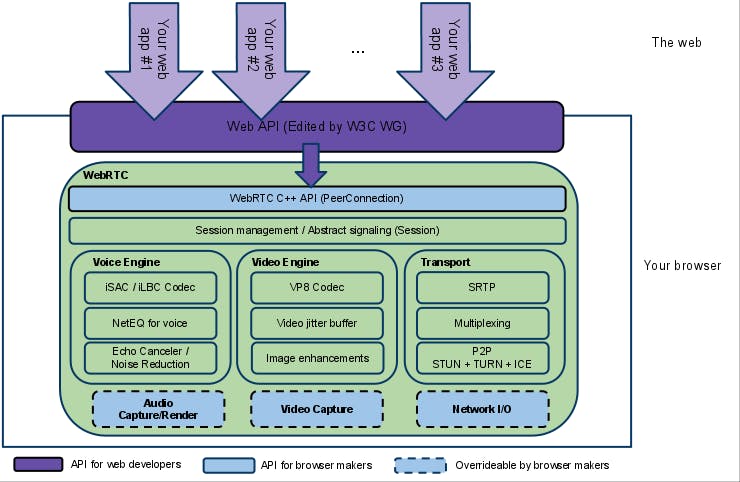

WebRTC enables rich, real-time audio/video (and data) directly in browsers without plugins. Under the hood, it combines multiple layers – a high-level JavaScript API (RTCPeerConnection) sits atop native engines (voice, video, transport) and protocols like SDP, ICE/STUN/TURN, DTLS, and SRTP (see diagram).

At a glance, a WebRTC connection involves two peers (say Alice and Bob) exchanging signaling messages (via your own server/channel) containing Session Description Protocol (SDP) offers/answers and ICE candidates. The peers gather all possible network paths (ICE), choose the best one, perform a DTLS handshake to establish encryption keys, and then begin streaming media over Secure RTP. Below we unpack each step in detail: how an SDP offer/answer is negotiated, how ICE/STUN/TURN works to traverse NATs, and how DTLS bootstraps the secure media path.

Signaling and the SDP Offer/Answer

WebRTC itself does not define signaling. Instead, applications must use some channel (WebSocket, REST, etc.) to exchange connection information. In particular, signaling carries three key pieces of info:

- Session control: “call start” or hang-up commands.

- Network configuration: each peer’s ICE candidates (IP addresses, ports).

- Media capabilities: each side’s SDP offer or answer, listing codecs, resolutions, and transport parameters.

Once Alice decides to call Bob, she creates an RTCPeerConnection (often supplying an iceServers config with STUN/TURN URLs) and acquires her local media (via getUserMedia). She then calls pc.createOffer(), which produces an SDP string describing her end of the session: which audio/video codecs and formats she can send and receive, her ICE credentials and DTLS certificate fingerprint, and, initially, few or no network candidates (those are usually sent separately as they are gathered). Alice immediately applies this offer to her own connection with pc.setLocalDescription(offer), and then sends the SDP offer to Bob over the signaling channel.

Bob receives the SDP offer via the signaling channel and applies it as the remote description (pc.setRemoteDescription(offer)) on his own RTCPeerConnection. He then creates his own answer SDP (pc.createAnswer()), applies it locally (pc.setLocalDescription(answer)), and sends that answer back to Alice, who applies it with pc.setRemoteDescription(answer). At this point both sides know each other’s media parameters and are “configured” for the session. In code, this looks roughly like:

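```javascript
// Runs inside an async function or ES module. `signaling` is your own channel
// (e.g. a WebSocket wrapper); it is not part of WebRTC.

// Caller (Alice) side:
const pc = new RTCPeerConnection({ iceServers: [{ urls: 'stun:stun.l.google.com:19302' }] });
const stream = await navigator.mediaDevices.getUserMedia({ audio: true, video: true });
stream.getTracks().forEach(t => pc.addTrack(t, stream));
const offer = await pc.createOffer();
await pc.setLocalDescription(offer);
signaling.send({ type: 'offer', sdp: pc.localDescription.sdp }); // send to Bob

// Callee (Bob) side, on his own RTCPeerConnection (bobPc), when the offer message `msg` arrives:
await bobPc.setRemoteDescription({ type: 'offer', sdp: msg.sdp });
const answer = await bobPc.createAnswer();
await bobPc.setLocalDescription(answer);
signaling.send({ type: 'answer', sdp: bobPc.localDescription.sdp }); // send back to Alice

// Back on Alice's side, when the answer message `msg` arrives:
await pc.setRemoteDescription({ type: 'answer', sdp: msg.sdp });
```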

The SDP itself is a text blob (lines such as v=0, o=- ..., m=audio ..., a=rtpmap:111 opus/48000/2, etc.). It includes: session info (v=, o=, s=), media descriptions (m= lines for audio/video with a port and a payload-type list, plus a= attributes for format details), connection data (c= lines), DTLS certificate fingerprints (a=fingerprint:sha-256 ...), and often an a=group:BUNDLE ... line indicating that multiple media streams will be multiplexed over one ICE transport. Modern browsers use BUNDLE by default, so audio and video share a single transport (one UDP or TCP flow).
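To make that structure concrete, here is a minimal sketch in plain JavaScript (no WebRTC API needed) that summarizes an SDP blob, such as pc.localDescription.sdp, into its BUNDLE group and, per m= section, the payload types, codec names, mid, setup role, and fingerprint. The function name summarizeSdp and its output shape are illustrative assumptions; it only handles the attributes named here, not the full SDP grammar.

```javascript
// Minimal SDP summarizer (a sketch, not a full RFC 8866 parser).
// Walks the SDP line by line and records, per m= section, the media kind,
// payload types, codec names from a=rtpmap, a=mid, a=setup and a=fingerprint.
function summarizeSdp(sdp) {
  const summary = { bundle: [], fingerprint: null, media: [] };
  let current = null; // the m= section being filled in, or null at session level

  for (const line of sdp.split(/\r?\n/)) {
    if (line.startsWith('m=')) {
      // m=<media> <port> <proto> <fmt list>
      const [kind, port, proto, ...payloadTypes] = line.slice(2).split(' ');
      current = { kind, port: Number(port), proto, payloadTypes, codecs: {}, mid: null, setup: null, fingerprint: null };
      summary.media.push(current);
    } else if (line.startsWith('a=group:BUNDLE ')) {
      // a=group:BUNDLE <mid> <mid> ...
      summary.bundle = line.slice('a=group:BUNDLE '.length).trim().split(/\s+/);
    } else if (line.startsWith('a=fingerprint:')) {
      // Can appear at session level or inside an m= section.
      const value = line.slice('a=fingerprint:'.length);
      if (current) current.fingerprint = value;
      else summary.fingerprint = value;
    } else if (current && line.startsWith('a=rtpmap:')) {
      // a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
      const [pt, encoding] = line.slice('a=rtpmap:'.length).split(' ');
      current.codecs[pt] = encoding;
    } else if (current && line.startsWith('a=mid:')) {
      current.mid = line.slice('a=mid:'.length);
    } else if (current && line.startsWith('a=setup:')) {
      current.setup = line.slice('a=setup:'.length);
    }
  }
  return summary;
}
```

For instance, calling summarizeSdp(pc.remoteDescription.sdp) after negotiation shows which payload types each m= section kept; an m= line with port 0 means that section was rejected.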

After this exchange, each side holds two SDP descriptions: its local (what it offered/answered) and its remote (what it received). But no media flows yet – we still need to deal with the network paths.

ICE: Finding a Network Path

Even with SDP exchanged, peers still need to determine how to reach each other through NATs and firewalls. That’s the job of ICE (Interactive Connectivity Establishment). Each peer gathers a list of possible network endpoints, called ICE candidates. There are several types:

- Host: The device’s own local IP address (e.g. 192.168.x.x), typically behind a NAT.

- Server-Reflexive (srflx): The public IP address as seen by a STUN server. A STUN request “discovers” how the NAT translates outbound traffic, yielding a routable address.

- Peer-Reflexive (prflx): A candidate learned during connectivity checks, when a STUN check reveals an address that was never signaled (for example, a fresh mapping created by a symmetric NAT). These are less common.

- Relay: An address provided by a TURN server, which will relay all media if no direct path works.
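Each candidate travels as a text line (a=candidate:... in SDP, or the candidate string of an RTCIceCandidate), and its typ field names one of the types above. A small parser makes that field easy to inspect while debugging. This is a sketch following the RFC 8839 candidate-attribute layout; parseCandidate is a hypothetical helper name.

```javascript
// Sketch: parse an ICE candidate attribute into named fields.
// Accepts "a=candidate:..." (SDP line) or "candidate:..." (RTCIceCandidate.candidate).
function parseCandidate(line) {
  const text = line.trim().replace(/^a=/, '').replace(/^candidate:/, '');
  const parts = text.split(/\s+/);
  if (parts.length < 8 || parts[6] !== 'typ') {
    throw new Error(`not an ICE candidate: ${line}`);
  }
  const candidate = {
    foundation: parts[0],
    component: Number(parts[1]),      // 1 = RTP (the only component when RTCP is muxed)
    protocol: parts[2].toLowerCase(), // 'udp' or 'tcp'
    priority: Number(parts[3]),
    address: parts[4],
    port: Number(parts[5]),
    type: parts[7],                   // 'host' | 'srflx' | 'prflx' | 'relay'
    extensions: {},
  };
  // The remaining tokens are name/value pairs such as raddr, rport, tcptype, generation.
  for (let i = 8; i + 1 < parts.length; i += 2) {
    candidate.extensions[parts[i]] = parts[i + 1];
  }
  return candidate;
}
```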

Typically, peers prefer the best candidates first. MDN notes:

“Each peer will propose its best candidates first, making their way down toward worse candidates. Ideally candidates are UDP (fast), though ICE does allow TCP too”.
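“Best” has a precise meaning here: every candidate carries a numeric priority. The sketch below computes it with the formula recommended in RFC 8445 (section 5.1.2), using the RFC’s suggested type preferences (host 126, peer-reflexive 110, server-reflexive 100, relay 0). Browsers choose their own local preferences, so real numbers will differ; candidatePriority is an illustrative name.

```javascript
// Candidate priority, RFC 8445 section 5.1.2:
//   priority = 2^24 * typePreference + 2^8 * localPreference + (256 - componentId)
// These type preferences are the RFC's recommended values; browsers may use others.
const TYPE_PREFERENCE = { host: 126, prflx: 110, srflx: 100, relay: 0 };

function candidatePriority(type, localPreference = 65535, componentId = 1) {
  if (!Object.prototype.hasOwnProperty.call(TYPE_PREFERENCE, type)) {
    throw new Error(`unknown candidate type: ${type}`);
  }
  return TYPE_PREFERENCE[type] * 2 ** 24 + localPreference * 2 ** 8 + (256 - componentId);
}

// Sorting by priority, highest first, yields the "best candidates first" order.
const ordered = ['relay', 'srflx', 'host', 'prflx']
  .map(type => ({ type, priority: candidatePriority(type) }))
  .sort((a, b) => b.priority - a.priority);

console.log(ordered.map(c => c.type).join(' > ')); // host > prflx > srflx > relay
```

With these values a host candidate for component 1 gets 2130706431, while a relay candidate gets only 16777215, which is why relayed paths end up at the bottom of the list.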

In practice, your app often supplies a list of STUN/TURN servers in the RTCPeerConnection constructor. For example:

```javascript
const pc = new RTCPeerConnection({
  iceServers: [
    { urls: ['stun:stun1.l.google.com:19302'] },
    { urls: ['turn:turn.example.com:3478'], username: 'user', credential: 'pass' }
  ]
});
```

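This tells the browser to query the STUN server(s) for server-reflexive candidates and to allocate relay candidates on the TURN server(s) in case they are needed. The pc.onicecandidate event fires repeatedly as new candidates are found (host, srflx, and eventually relay candidates). Each candidate is sent to the remote peer via signaling, and the remote peer passes it to pc.addIceCandidate(). Local gathering is complete when onicecandidate fires with a null candidate (pc.iceGatheringState then becomes “complete”); the app can relay that to the other side as an end-of-candidates indication.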
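The trickle exchange can be sketched as follows. It assumes a hypothetical sendToPeer(message) function for your signaling channel and a message format of our own choosing ({ type: 'candidate' } and { type: 'end-of-candidates' }). It also queues remote candidates that arrive before setRemoteDescription() has completed, because addIceCandidate() rejects while there is no remote description.

```javascript
// Sketch: trickle ICE over a hypothetical sendToPeer(message) function.
function wireTrickleIce(pc, sendToPeer) {
  const pending = []; // remote candidates that arrived before the remote description

  // Local side: forward each candidate as soon as the browser gathers it.
  pc.onicecandidate = (event) => {
    if (event.candidate) {
      // toJSON() gives { candidate, sdpMid, sdpMLineIndex, usernameFragment }.
      sendToPeer({ type: 'candidate', candidate: event.candidate.toJSON() });
    } else {
      // A null candidate means local gathering has finished.
      sendToPeer({ type: 'end-of-candidates' });
    }
  };

  return {
    // Call for each 'candidate' message received from the peer.
    async addRemoteCandidate(candidateInit) {
      if (!pc.remoteDescription) {
        // addIceCandidate() would reject without a remote description, so queue it.
        pending.push(candidateInit);
        return;
      }
      await pc.addIceCandidate(candidateInit);
    },
    // Call once setRemoteDescription() has resolved.
    async flushPending() {
      while (pending.length > 0) {
        await pc.addIceCandidate(pending.shift());
      }
    },
  };
}
```

With a real connection you would call wireTrickleIce right after constructing the RTCPeerConnection (and before setLocalDescription), route incoming candidate messages to addRemoteCandidate(), and call flushPending() right after setRemoteDescription() resolves.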

Meanwhile, both sides keep exchanging candidates, often incrementally as each one is discovered (a process known as Trickle ICE). As soon as a side has both local and remote candidates, it can form candidate pairs and start ICE connectivity checks (STUN binding requests), so a working path may be found before gathering finishes, even if a better pair is selected later. The ICE algorithm pairs each of Alice’s candidates with each compatible candidate of Bob’s (same component, transport, and IP family) and sends STUN-based checks to see which paths actually work. Pairs are checked in priority order, so direct paths (host or server-reflexive candidates) are tried before TURN-relayed ones, and UDP is generally preferred over TCP.

In the end, ICE chooses one candidate pair as the best connection. One peer acts as the controlling agent (normally the side that sent the initial offer) and finalizes the selection by nominating a working pair: it sends a STUN check on that pair carrying the USE-CANDIDATE attribute, which tells the other peer which pair to use.
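Pair ordering follows directly from the candidate priorities, combined in a way that depends on who is controlling. Below is a sketch of the RFC 8445 (section 6.1.2.3) pair-priority formula and of building a priority-ordered checklist. It is simplified: IP-family matching and pair pruning are left out, pairPriority and buildChecklist are illustrative names, and candidates are assumed to be objects with component, protocol, and priority fields.

```javascript
// Pair priority (RFC 8445 section 6.1.2.3), where G is the controlling agent's
// candidate priority and D the controlled agent's:
//   pairPriority = 2^32 * MIN(G, D) + 2 * MAX(G, D) + (G > D ? 1 : 0)
// BigInt is used because the result can exceed Number.MAX_SAFE_INTEGER.
function pairPriority(g, d) {
  const G = BigInt(g);
  const D = BigInt(d);
  const min = G < D ? G : D;
  const max = G > D ? G : D;
  return (1n << 32n) * min + 2n * max + (G > D ? 1n : 0n);
}

// Pair every local candidate with every compatible remote one (same component
// and transport here) and order the pairs by priority, highest first.
// Because G and D are assigned by role, both agents compute the same order.
function buildChecklist(localCandidates, remoteCandidates, isControlling) {
  const pairs = [];
  for (const local of localCandidates) {
    for (const remote of remoteCandidates) {
      if (local.component !== remote.component || local.protocol !== remote.protocol) continue;
      const priority = isControlling
        ? pairPriority(local.priority, remote.priority)
        : pairPriority(remote.priority, local.priority);
      pairs.push({ local, remote, priority });
    }
  }
  return pairs.sort((a, b) => (a.priority > b.priority ? -1 : a.priority < b.priority ? 1 : 0));
}
```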

STUN vs TURN

STUN and TURN are the workhorses behind ICE. A STUN (Session Traversal Utilities for NAT) server simply tells a client “here is your public IP and port”. In WebRTC:

“The client will send a request to a STUN server on the Internet who will reply with the client’s public address and whether or not the client is accessible behind the router’s NAT”.

This yields server-reflexive candidates (srflx) which allow peers to try connecting via their public endpoints. STUN itself does not relay any media – it only discovers addresses. If both peers are behind simple NATs, these addresses may suffice for a direct P2P connection.
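The STUN exchange itself is small. The sketch below (plain JavaScript, IPv4 only, no networking) builds the 20-byte Binding Request defined in RFC 8489 and decodes the XOR-MAPPED-ADDRESS attribute of a Binding Success Response, which is where the server-reflexive address comes from. Browsers do this internally; the helper names are our own, and sending the bytes over UDP is left out.

```javascript
// Sketch of the STUN Binding exchange (RFC 8489 message format), IPv4 only.
const MAGIC_COOKIE = 0x2112a442;

// 20-byte header: type (0x0001 = Binding Request), body length, magic cookie,
// 96-bit transaction ID. A plain Binding Request carries no attributes.
function buildBindingRequest(transactionId) {
  if (transactionId.length !== 12) throw new Error('transaction ID must be 12 bytes');
  const msg = new Uint8Array(20);
  const view = new DataView(msg.buffer);
  view.setUint16(0, 0x0001);
  view.setUint16(2, 0);
  view.setUint32(4, MAGIC_COOKIE);
  msg.set(transactionId, 8);
  return msg;
}

// Decode the public address from a Binding Success Response (type 0x0101)
// via its XOR-MAPPED-ADDRESS attribute (0x0020). A real client would also
// check that the transaction ID matches its request.
function parseXorMappedAddress(msg) {
  const view = new DataView(msg.buffer, msg.byteOffset, msg.byteLength);
  if (view.getUint16(0) !== 0x0101) throw new Error('not a Binding Success Response');
  if (view.getUint32(4) !== MAGIC_COOKIE) throw new Error('missing magic cookie');
  const end = 20 + view.getUint16(2);
  let offset = 20;
  while (offset + 4 <= end) {
    const type = view.getUint16(offset);
    const length = view.getUint16(offset + 2);
    const value = offset + 4;
    if (type === 0x0020 && view.getUint8(value + 1) === 0x01) { // family 0x01 = IPv4
      // Port is XORed with the cookie's top 16 bits, the address with the whole cookie.
      const port = view.getUint16(value + 2) ^ (MAGIC_COOKIE >>> 16);
      const addr = (view.getUint32(value + 4) ^ MAGIC_COOKIE) >>> 0;
      const ip = [24, 16, 8, 0].map(shift => (addr >>> shift) & 0xff).join('.');
      return { ip, port };
    }
    offset = value + length + ((4 - (length % 4)) % 4); // attribute values are padded to 4 bytes
  }
  return null;
}
```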

However, many NATs (e.g. symmetric NATs) will not allow this, or firewalls may block unknown UDP traffic. In those cases, you need TURN (Traversal Using Relays around NAT). A TURN server acts as a relay: both peers send all their media to the TURN server, which forwards it between them. As GetStream’s guide explains:

“TURN servers provide a fallback mechanism when direct peer-to-peer connections cannot be established with STUN. Unlike STUN, which merely facilitates discovery, TURN actually relays media data between peers.”

TURN works like this: a client allocates a relay address on the TURN server (authenticating with its credentials), and then provides that relay IP/port as a candidate to the other peer. All traffic on that path then goes via the TURN server. TURN makes connectivity possible in most networks where direct paths fail, at the cost of extra latency and bandwidth usage. Indeed, TURN relaying has significant tradeoffs:

“While TURN servers ensure near-universal connectivity, they come with significant tradeoffs: increased latency, bandwidth costs, and infrastructure requirements…”.

In production, a good practice is to use TURN only when necessary. Commonly, apps configure one or two public STUN servers (e.g. Google’s stun:stun.l.google.com:19302) and a TURN server. If ICE finds a working host or srflx candidate pair, media goes direct; only when no direct pair succeeds does ICE fall back to a pair involving the TURN relay candidate.
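For the TURN entry in such a configuration, a common production approach (an assumption here, not something WebRTC itself defines) is short-lived credentials following the “TURN REST API” convention supported by coturn’s use-auth-secret mode: the username is an expiry timestamp plus a user id, and the password is a base64 HMAC-SHA1 of that username under a secret shared with the TURN server. A Node.js sketch of a hypothetical server-side helper:

```javascript
const { createHmac } = require('crypto');

// Hypothetical helper: mint short-lived TURN credentials, assuming the TURN
// server is configured with the same shared secret (e.g. coturn use-auth-secret).
function turnCredentials(sharedSecret, userId, ttlSeconds = 3600, nowMs = Date.now()) {
  const expiry = Math.floor(nowMs / 1000) + ttlSeconds; // Unix time, in seconds
  const username = `${expiry}:${userId}`;                // "<expiry>:<user id>"
  const credential = createHmac('sha1', sharedSecret)    // HMAC-SHA1 keyed by the shared secret
    .update(username)
    .digest('base64');
  return { username, credential };
}

// Example iceServers entry built from the result (turn.example.com is a placeholder).
const { username, credential } = turnCredentials('replace-with-shared-secret', 'alice');
const turnServer = { urls: ['turn:turn.example.com:3478'], username, credential };
```

The browser app would fetch these from its own backend and pass them as username and credential in its iceServers list; a TURN server configured this way refuses them once the embedded timestamp has passed.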

The DTLS-SRTP Handshake

Once ICE finds a working path, the two peers have UDP (or TCP) connectivity. The next step is to secure the media. WebRTC mandates encrypted media using DTLS-SRTP. This means the two peers perform a DTLS handshake over the chosen ICE transport. The SDP had already included each side’s certificate fingerprint (e.g. a=fingerprint:sha-256 ...), so the peers can verify they have the expected certificates. From RFC 8827:

“Once the requisite ICE checks have completed, … Alice and Bob perform a DTLS handshake on every component which has been established by ICE. … Once the DTLS handshake has completed, the keys are exported … and used to key SRTP for the media channels.”
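What “verifying the fingerprint” means can be sketched in a few lines: hash the peer’s DER-encoded certificate with the algorithm named in a=fingerprint and compare the result to the colon-separated hex in SDP. The browser performs this check itself during the handshake; this Node.js sketch, with illustrative helper names and only sha-256 supported, is just for reasoning about or debugging munged SDP.

```javascript
const { createHash } = require('crypto');

// SDP fingerprints are uppercase hex bytes separated by colons, e.g. "AB:CD:...".
function formatFingerprint(digest) {
  return Array.from(digest, byte => byte.toString(16).padStart(2, '0').toUpperCase()).join(':');
}

// Check a DER-encoded certificate against an a=fingerprint value
// such as "sha-256 AB:CD:...". Only sha-256 is handled in this sketch.
function matchesSdpFingerprint(certDer, fingerprintValue) {
  const [algorithm, expected] = fingerprintValue.trim().split(/\s+/);
  if (!algorithm || !expected) throw new Error('malformed fingerprint value');
  if (algorithm.toLowerCase() !== 'sha-256') {
    throw new Error(`unsupported hash algorithm: ${algorithm}`);
  }
  const actual = formatFingerprint(createHash('sha256').update(certDer).digest());
  return actual === expected.toUpperCase();
}
```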

Because WebRTC typically uses BUNDLE (one transport for all media), there will usually be a single DTLS handshake. After the handshake, both sides derive the same SRTP keys (via the RFC 5705 keying-material exporter) and start encrypting and decrypting media with SRTP. The SDP’s a=setup: lines coordinate which side acts as DTLS client or server: the offerer sends a=setup:actpass, and the answerer replies with active (it initiates the handshake as DTLS client) or passive (it waits as DTLS server).
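That setup negotiation can be sketched as a small decision table. It follows the RFC 5763 role semantics and assumes the answerer picks active when offered actpass, which is what JSEP (RFC 8829) recommends; the function names are illustrative.

```javascript
// a=setup values (RFC 5763): 'active' endpoints send the DTLS ClientHello (DTLS client),
// 'passive' endpoints wait for it (DTLS server), 'actpass' lets the answerer decide.
function chooseAnswerSetup(offerSetup) {
  switch (offerSetup) {
    case 'actpass': return 'active'; // the choice JSEP recommends for answerers
    case 'active': return 'passive';
    case 'passive': return 'active';
    default: throw new Error(`invalid a=setup value in offer: ${offerSetup}`);
  }
}

// Resolve which side acts as DTLS client and which as DTLS server.
function dtlsRoles(offerSetup) {
  const answerSetup = chooseAnswerSetup(offerSetup);
  const answerer = answerSetup === 'active' ? 'client' : 'server';
  // Whatever the offer said, the offerer ends up with the opposite role.
  const offerer = answerer === 'client' ? 'server' : 'client';
  return { offerSetup, answerSetup, offerer, answerer };
}

const roles = dtlsRoles('actpass'); // roles.offerer === 'server', roles.answerer === 'client'
```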

Meanwhile, data channels (RTCDataChannel) use SCTP over DTLS. SCTP packets travel inside the same DTLS session, so text or binary data sent over data channels is encrypted as well.

With DTLS finished, the peer connection is fully set up: any RTP packets (video/audio) sent will be SRTP-encrypted and keyed via the just-completed DTLS handshake. At this point, real media starts flowing.

After DTLS, media (audio/video) is sent over the agreed ICE candidate pair. Thanks to the a=group:BUNDLE line in SDP, multiple media tracks (e.g. microphone and camera) share the same 5-tuple (IP/port/protocol). WebRTC libraries multiplex RTP streams with different SSRCs or MIDs over one ICE/DTLS/SRTP channel. This reduces ports used and simplifies traversal.

For example, Alice might send one UDP 5-tuple carrying both an Opus audio SRTP stream and a VP8 video SRTP stream. Bob’s browser demultiplexes the packets by SSRC (and the MID header extension) and decodes each stream; track events then deliver the remote tracks, which the app attaches to HTMLMediaElements such as video elements. Periodic RTCP packets (sender/receiver reports, NACKs) also go over the same transport to adapt bitrate and handle packet loss.
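Since STUN, DTLS, RTP, and RTCP all share one 5-tuple, the receiver first has to tell packet kinds apart. RFC 7983 does this by the first byte of each UDP payload (0-3 STUN, 20-63 DTLS, 128-191 RTP/RTCP), and RFC 5761 separates RTCP from RTP by the second byte (RTCP packet types 192-223). A sketch of that classification, plus reading the SSRC from the RTP fixed header; the function names are illustrative, and TURN ChannelData (64-79) simply falls into “unknown”.

```javascript
// Classify a UDP payload received on the shared (BUNDLE) transport.
function classifyPacket(packet) {
  if (packet.length === 0) return 'unknown';
  const first = packet[0];
  if (first <= 3) return 'stun';                 // ICE connectivity and consent checks
  if (first >= 20 && first <= 63) return 'dtls'; // DTLS records (handshake, SCTP data)
  if (first >= 128 && first <= 191) {            // RTP version 2 or RTCP
    const second = packet[1];
    return second >= 192 && second <= 223 ? 'rtcp' : 'rtp';
  }
  return 'unknown';                              // e.g. TURN ChannelData (64-79)
}

// SSRC of an RTP packet: bytes 8-11 of the fixed header, big-endian.
function rtpSsrc(packet) {
  if (packet.length < 12) throw new Error('too short for an RTP header');
  return new DataView(packet.buffer, packet.byteOffset, packet.byteLength).getUint32(8);
}
```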

Unlike many older SIP/VoIP deployments, which key SRTP with SDES, WebRTC never uses SDES: it always uses DTLS-SRTP. Also, browsers handle echo cancellation, jitter buffering, and adaptive bitrate internally, so app-level code only sees ready-to-play streams.

Putting It All Together: Connection Steps

In summary, the full WebRTC connection flow proceeds as follows:

- Create PeerConnections and gather media. Each side calls new RTCPeerConnection(...) (with ICE servers). Media tracks (audio/video) are added to the connection with addTrack (the older addStream is deprecated).

- Offer/Answer exchange (SDP). Peer A calls createOffer() and setLocalDescription(offer), and signals the offer to Peer B. Peer B applies it via setRemoteDescription(offer), then calls createAnswer() and setLocalDescription(answer), and signals the answer back; Peer A applies it with setRemoteDescription(answer). Now both peers have each other’s media capabilities and DTLS fingerprints.

- ICE candidate gathering/exchange. Both peers start ICE: contacting STUN/TURN servers and collecting host, srflx (and possibly relay) candidates. Each candidate found is sent to the other side via signaling. This often happens in parallel with (or shortly after) the SDP exchange (Trickle ICE).

- Connectivity Checks (ICE). As candidates are exchanged, ICE connectivity checks run. Each candidate pair is tested with STUN messages, and the peers discover which pair actually works. (UDP pairs are normally prioritized; TCP serves as a fallback.) When a suitable pair is found, ICE reports connected or completed.

- DTLS Handshake. Over the working transport, the two sides perform a DTLS handshake (one client, one server), and each verifies the other’s certificate against the fingerprint from SDP. Once this completes, SRTP keys are derived.

- Media Flow. Encrypted audio and video packets begin flowing (via SRTP) on the selected candidate pair. Each side’s ontrack handler (which fires when the remote description is applied) delivers the incoming tracks, which start playing once media arrives. Data channels (SCTP over DTLS) also become available at this point.

Each of these steps can occur somewhat concurrently (e.g. candidate gathering, candidate exchange, and connectivity checks overlap), but this linear outline captures the major milestones. At the end, a peer-to-peer connection is established (directly or through a TURN relay) with secured media over UDP, or over TCP if UDP was blocked.

Common Pitfalls and Debugging Tips

Building WebRTC apps in production often encounters tricky issues. Here are some common pain points and practical tips:

- ICE Connectivity Failures: If iceConnectionState goes to failed (or stays disconnected), it usually means the peers couldn’t find a working network path. Typical causes: missing or misconfigured STUN/TURN servers, a strict symmetric NAT or firewall, or a network that only allows TCP. To debug, check the ICE candidate exchange: are you actually sending ICE candidates? (Remember to set the onicecandidate handler before calling setLocalDescription, since gathering starts then, and to forward every candidate to the peer.) Also ensure your STUN/TURN server URLs are reachable (test them separately if needed). Tools: chrome://webrtc-internals shows ICE state changes and the chosen candidate pair, and the WebRTC Stats API (pc.getStats()) reports whether each candidate pair succeeded or failed.

- SDP and Codec Mismatch: If a connection is established but no media arrives (silent audio, black video), a common culprit is mismatched codecs or missing media lines. Inspect the final negotiated SDP (pc.localDescription.sdp and pc.remoteDescription.sdp) once negotiation completes, and ensure that each media section (m= line) has at least one codec in common; a rejected section shows port 0 in its m= line. Browsers today support Opus (audio) and VP8/VP9/H.264 (video) out of the box, but if you restrict negotiation to a narrow or proprietary codec you may end up with no agreed codec. Also check that the SDP’s a=fingerprint lines match what each side expects (in case you’re manually munging SDP for some reason).

- DTLS Handshake Issues: If ICE is connected but media still doesn’t flow, the DTLS handshake might be failing or stuck. This can happen if the certificate fingerprint in SDP was altered or if the packet path is blocked in one direction (DTLS requires bidirectional UDP). Check in chrome://webrtc-internals whether the DTLS state is “connected”. You can also capture packets (Wireshark) to see whether DTLS ClientHello and ServerHello messages are exchanged. Zero bytes of media received while ICE is connected often indicates a DTLS failure.

- Media Track/Permission Problems: Sometimes a developer forgets to attach media properly. Ensure you’ve successfully obtained tracks with getUserMedia() and added them to the connection before creating the offer (or, if you add them later, renegotiate from the negotiationneeded event). Also verify that the user granted camera/mic permission (browsers block capture otherwise). If a track is muted or ended, no media is sent from it. In code, logging pc.ontrack events and checking the video elements can reveal whether streams arrive but aren’t displaying.

- Network Bandwidth and CPU Bottlenecks: High-resolution video can tax devices and networks. Monitor bandwidth: the Stats API can show bytesSent/bytesReceived, packet loss, round-trip time, and availableOutgoingBitrate. If you notice excessive packet loss or CPU load, try reducing video resolution or framerate. WebRTC’s congestion control will reduce quality on its own, but you can also use RTCRtpSender.setParameters() to cap bitrate, or enable simulcast/SVC if using an SFU. Heavy encoding or video processing on the CPU (for example, JavaScript or shader-based effects) can drop frames. Also, as noted above, relaying through TURN adds latency and bandwidth cost, so keep TURN for the cases that really need it and make sure direct paths can be found whenever possible.

- Browser Compatibility: Different browsers have minor differences (e.g. SDP semantics, where Chrome’s legacy “Plan B” has given way to the standard “Unified Plan” used by current browsers, DTLS versions, and stats field names). Stick to standardized SDP and use feature detection (e.g. RTCRtpSender.getCapabilities('video')). Ensure you aren’t relying on any proprietary APIs. Testing end-to-end on all target browsers (Chrome, Firefox, Safari, Edge) is essential. Common bugs include a missing await on async calls or not handling renegotiation (e.g. adding tracks after the fact).

- Debugging Tools: As mentioned, webrtc-internals is invaluable. In Chrome, visit chrome://webrtc-internals to see live graphs and logs for each RTCPeerConnection: ICE states, DTLS states, bitrates, packet counts, and stats reports. Firefox’s about:webrtc offers a similar summary. You can also call pc.getStats() in JavaScript and log the results. For deeper analysis, Wireshark can decode SRTP/DTLS if you export the keys (Chrome can log DTLS keying material). Simple logging in your app (printing iceConnectionState, connectionState, and onicecandidate events) can quickly show where the process is stuck.
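Several of the checks above come down to reading pc.getStats(). The sketch below assumes the standard stats fields (transport.selectedCandidatePairId; candidate-pair localCandidateId, remoteCandidateId, and currentRoundTripTime; candidateType; outbound-rtp bytesSent and timestamp). Since RTCStatsReport is map-like, a plain Map stands in for it. The fallback to a nominated, succeeded pair is a heuristic for implementations that don’t expose selectedCandidatePairId, and the function names are illustrative.

```javascript
// Find the candidate pair ICE actually selected and describe it.
function selectedPair(report) {
  let pair = null;
  report.forEach(stats => {
    if (stats.type === 'transport' && stats.selectedCandidatePairId) {
      pair = report.get(stats.selectedCandidatePairId) || null;
    }
  });
  if (!pair) {
    // Heuristic fallback: a nominated pair whose checks succeeded.
    report.forEach(stats => {
      if (!pair && stats.type === 'candidate-pair' && stats.nominated && stats.state === 'succeeded') {
        pair = stats;
      }
    });
  }
  if (!pair) return null;
  const local = report.get(pair.localCandidateId);
  const remote = report.get(pair.remoteCandidateId);
  return {
    localType: local ? local.candidateType : undefined,
    remoteType: remote ? remote.candidateType : undefined,
    relayed: [local, remote].some(c => c && c.candidateType === 'relay'), // true = via TURN
    rttSeconds: pair.currentRoundTripTime, // reported in seconds
  };
}

// Average outgoing media bitrate (bits/s) between two getStats() snapshots.
function outboundBitrate(before, after) {
  let bits = 0;
  let seconds = 0;
  after.forEach(stats => {
    if (stats.type !== 'outbound-rtp') return;
    const prev = before.get(stats.id);
    if (!prev) return;
    bits += 8 * (stats.bytesSent - prev.bytesSent);
    seconds = Math.max(seconds, (stats.timestamp - prev.timestamp) / 1000); // timestamps are in ms
  });
  return seconds > 0 ? bits / seconds : 0;
}
```

In a browser you might take one snapshot with await pc.getStats(), wait a second, take another, and then log selectedPair(after) and outboundBitrate(before, after); relayed: true means the media is going through TURN.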

Conclusion

WebRTC glues together multiple protocols (SDP, ICE, STUN/TURN, DTLS/SRTP) to achieve real-time peer-to-peer media in browsers. Understanding each layer helps debug and optimize live apps. We’ve walked through a complete flow: the initial signaling exchange of SDP offers/answers, the ICE candidate gathering (host/srflx/relay) and connectivity checks, the DTLS handshake to secure the transport, and finally the encrypted media streaming. Armed with this knowledge and the tools (browser logs, getStats, packet dumps), a developer can better diagnose issues, be it a missing TURN server, a flipped SDP fingerprint, or network congestion. In production, care must be taken to supply robust STUN/TURN infrastructure, handle renegotiations, and tune performance. But by following the standardized WebRTC path, one can achieve low-latency, secure audio and video between browsers without proprietary plugins.

References: We drew on WebRTC specifications and documentation for details. For signaling, see MDN’s WebRTC connectivity overview. For ICE/STUN/TURN behavior, see MDN and ecosystem guides. For security, the IETF WebRTC Security Architecture (RFC 8827) describes the DTLS/SRTP requirements. These sources, among others, informed the explanations above.