Why Your A/B Testing Strategy is Broken (and How to Fix It)

Author:

(1) Daniel Beasley

Table of Links

-

Introduction

-

Hypothesis testing

2.1 Introduction

2.2 Bayesian statistics

2.3 Test martingales

2.4 p-values

2.5 Optional Stopping and Peeking

2.6 Combining p-values and Optional Continuation

2.7 A/B testing

-

Safe Tests

3.1 Introduction

3.2 Classical t-test

3.3 Safe t-test

3.4 χ2 -test

3.5 Safe Proportion Test

-

Safe Testing Simulations

4.1 Introduction and 4.2 Python Implementation

4.3 Comparing the t-test with the Safe t-test

4.4 Comparing the χ2 -test with the safe proportion test

-

Mixture sequential probability ratio test

5.1 Sequential Testing

5.2 Mixture SPRT

5.3 mSPRT and the safe t-test

-

Online Controlled Experiments

6.1 Safe t-test on OCE datasets

-

Vinted A/B tests and 7.1 Safe t-test for Vinted A/B tests

7.2 Safe proportion test for sample ratio mismatch

-

Conclusion and References

1 Introduction

Randomized controlled trials (RCTs) are the gold standard for inferring causal relationships between treatments and effects. They are widely applied by scientists to deepen understanding of their disciplines. Within the past two decades, they have found applications in digital products as well, under the name A/B test. An A/B test is a simple RCT to compare the effect of a treatment (group B) to a control (group A). The two groups are compared with a statistical test which is used to make a decision about the effect.

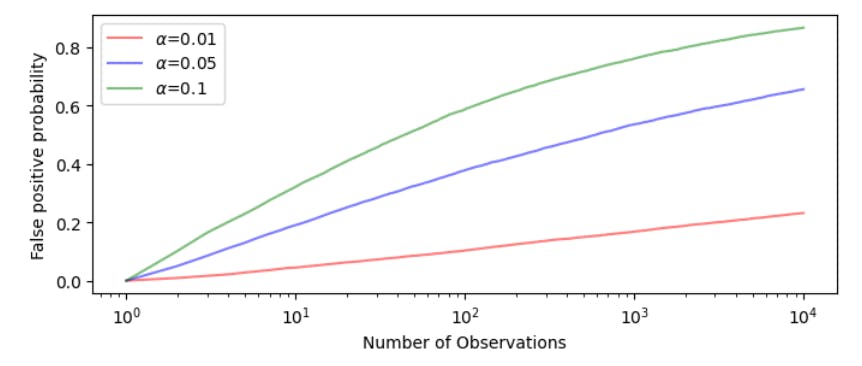

Almost all statistical tests for A/B tests rely on fixed-horizon testing. This testing setup involves determining the number of users required for the test, collecting the data, and finally analyzing the results. However, this method of testing does not align with the realtime capabilities of modern data infrastructure and the experimenters’ desires to make decisions quickly. Newly developed statistical methodologies allow experimenters to abandon fixed-horizon testing and analyze test results at any time. This anytime-valid inference (AVI) can lead to more effective use of experimentation resources and more accurate test results.

Safe testing is a novel statistical theory that accomplishes these objectives. As we will see, safe A/B testing allows experimenters to continually monitor results of their experiments without increasing the risk of drawing incorrect conclusions. Furthermore, we will see that it requires less data than standard statistical tests to achieve these results. Large technology companies are currently exploring AVI in limited capacities, but safe testing outperforms available tests in terms of the number of samples required to detect significant effects. This could lead to wide-scale adoption of safe testing for anytime-valid inference of test results.

This thesis contains 6 sections. Section 2 contains an introduction to hypothesis testing, as well as other statistical concepts that are relevant to the reader. It also explains how the inflexibility of classical statistical testing causes issues for practitioners. Section 3 introduces the concepts of safe testing. Furthermore, it derives the test statistics for the safe t-test and the safe proportion test. Section 4 simulates the performance of the safe statistics and compares them to their classical alternatives. Section 5 compares the safe t-test to another popular anytime-valid test, the mixture sequential probability ratio test (mSPRT). Section 6 compares the safe t-test and the mSPRT on a wide range of online experimental data. Finally, Section 7 is devoted to comparing the safe tests to the classical statistical tests at Vinted, a large-scale technology company.

This paper is